Yocto Project Software Development Kit (SDK) Developer's Guide

Copyright © 2010-2016 Linux Foundation

Permission is granted to copy, distribute and/or modify this document under the terms of the Creative Commons Attribution-Share Alike 2.0 UK: England & Wales as published by Creative Commons.

Note

For the latest version of this manual associated with this Yocto Project release, see the Yocto Project Software Development Kit (SDK) Developer's Guide from the Yocto Project website.

| Revision History | |
|---|---|
| Revision 2.1 | April 2016 |
| Released with the Yocto Project 2.1 Release. | |

Table of Contents

- 1. Introduction

- 2. Using the Standard SDK

- 3. Using the Extensible SDK

- 3.1. Setting Up to Use the Extensible SDK

- 3.2. Using devtool in Your SDK Workflow

- 3.3. A Closer Look at devtool add

- 3.4. Working With Recipes

- 3.5. Restoring the Target Device to its Original State

- 3.6. Installing Additional Items Into the Extensible SDK

- 3.7. Updating the Extensible SDK

- 3.8. Creating a Derivative SDK With Additional Components

- A. Obtaining the SDK

- B. Customizing the SDK

- B.1. Configuring the Extensible SDK

- B.2. Adjusting the Extensible SDK to Suit Your Build System Setup

- B.3. Changing the Appearance of the Extensible SDK

- B.4. Providing Updates After Installing the Extensible SDK

- B.5. Providing Additional Installable Extensible SDK Content

- B.6. Minimizing the Size of the Extensible SDK Installer Download

Chapter 1. Introduction

1.1. Introduction

Welcome to the Yocto Project Software Development Kit (SDK) Developer's Guide. This manual explains how to use both the standard Yocto Project SDK and an extensible SDK to develop applications and images using the Yocto Project. The manual also provides information on how to use the popular Eclipse™ IDE as part of your application development workflow within the SDK environment.

Prior to the 2.0 Release of the Yocto Project, application development was primarily accomplished through the use of the Application Development Toolkit (ADT) and the availability of stand-alone cross-development toolchains and other tools. With the 2.1 Release of the Yocto Project, application development has transitioned to a more traditional SDK and an extensible SDK.

A standard SDK consists of the following:

Cross-Development Toolchain: This toolchain contains a compiler, debugger, and various miscellaneous tools.

Libraries, Headers, and Symbols: The libraries, headers, and symbols are specific to the image (i.e. they match the image).

Environment Setup Script: This *.sh file, once run, sets up the cross-development environment by defining variables and preparing for SDK use.

You can use the standard SDK to independently develop and test code that is destined to run on some target machine.

An extensible SDK consists of everything that the standard SDK has plus tools that allow you to easily add new applications and libraries to an image, modify the source of an existing component, test changes on the target hardware, and easily integrate an application into the OpenEmbedded build system.

SDKs are completely self-contained. The binaries are linked against their own copy of libc, which results in no dependencies on the target system. To achieve this, the pointer to the dynamic loader is configured at install time since that path cannot be dynamically altered. This is the reason for a wrapper around the populate_sdk and populate_sdk_ext archives.

Another feature of the SDKs is that only one set of cross-compiler toolchain binaries is produced per architecture. This feature takes advantage of the fact that the target hardware can be passed to gcc as a set of compiler options. Those options are set up by the environment script and contained in variables such as CC and LD. This reduces the space needed for the tools. Understand, however, that a sysroot is still needed for every target since those binaries are target-specific.
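As a rough illustration of how the target options travel inside these variables, the following sketch splits a CC value into the compiler binary and its target options. The helper names cc_binary and cc_options are hypothetical and are not part of the SDK. The sample value in the comment is the i586 CC setting shown in the "Makefile-Based Projects" section; your own SDK defines its own values.

```shell
# Hypothetical helpers (not part of the SDK): split a CC value such as
#   "i586-poky-linux-gcc -m32 -march=i586 --sysroot=/opt/poky/2.1/sysroots/i586-poky-linux"
# into the compiler binary and the target-specific options.

# Print the first word of the CC value: the cross-compiler binary.
cc_binary() {
    set -- $1                  # intentional word splitting on whitespace
    echo "$1"
}

# Print everything after the first word: the options that select the target.
cc_options() {
    set -- $1                  # intentional word splitting on whitespace
    [ "$#" -gt 0 ] && shift    # drop the compiler binary if there is one
    echo "$*"
}
```

After sourcing an environment setup script, running cc_options "$CC" would be one way to see which machine and sysroot options the script selected.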

The SDK development environment consists of the following:

The self-contained SDK, which is an architecture-specific cross-toolchain and matching sysroots (target and native) all built by the OpenEmbedded build system (e.g. the SDK). The toolchain and sysroots are based on a Metadata configuration and extensions, which allows you to cross-develop on the host machine for the target hardware.

The Quick EMUlator (QEMU), which lets you simulate target hardware. QEMU is not literally part of the SDK. You must build and include this emulator separately. However, QEMU plays an important role in the development process that revolves around use of the SDK.

The Eclipse IDE Yocto Plug-in. This plug-in is available for you if you are an Eclipse user. In the same manner as QEMU, the plug-in is not literally part of the SDK but is rather available for use as part of the development process.

Various user-space tools that greatly enhance your application development experience. These tools are also separate from the actual SDK but can be independently obtained and used in the development process.

1.1.1. The Cross-Development Toolchain

The Cross-Development Toolchain consists of a cross-compiler, cross-linker, and cross-debugger that are used to develop user-space applications for targeted hardware. This toolchain is created by running a toolchain installer script or through a Build Directory that is based on your Metadata configuration or extension for your targeted device. The cross-toolchain works with a matching target sysroot.

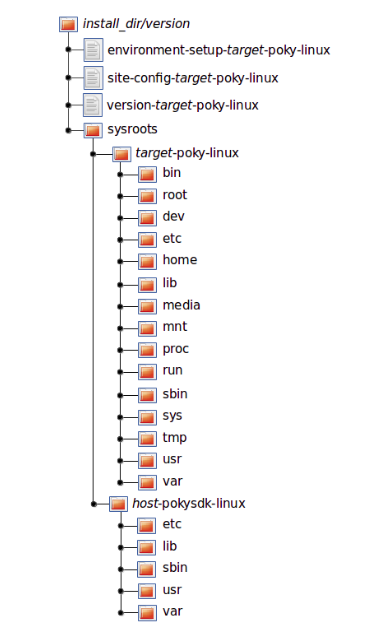

1.1.2. Sysroots

The native and target sysroots contain needed headers and libraries for generating binaries that run on the target architecture. The target sysroot is based on the target root filesystem image that is built by the OpenEmbedded build system and uses the same Metadata configuration used to build the cross-toolchain.

1.1.3. The QEMU Emulator

The QEMU emulator allows you to simulate your hardware while running your application or image. QEMU is not part of the SDK but is made available in a number of ways:

If you have cloned the poky Git repository to create a Source Directory and you have sourced the environment setup script, QEMU is installed and automatically available.

If you have downloaded a Yocto Project release and unpacked it to create a Source Directory and you have sourced the environment setup script, QEMU is installed and automatically available.

If you have installed the cross-toolchain tarball and you have sourced the toolchain's setup environment script, QEMU is also installed and automatically available.

1.1.4. Eclipse Yocto Plug-in

The Eclipse IDE is a popular development environment and it fully supports development using the Yocto Project. When you install and configure the Eclipse Yocto Project Plug-in into the Eclipse IDE, you maximize your Yocto Project experience. Installing and configuring the Plug-in results in an environment that has extensions specifically designed to let you more easily develop software. These extensions allow for cross-compilation, deployment, and execution of your output into a QEMU emulation session. You can also perform cross-debugging and profiling. The environment also supports a suite of tools that allows you to perform remote profiling, tracing, collection of power data, collection of latency data, and collection of performance data.

For information about the application development workflow that uses the Eclipse IDE and for a detailed example of how to install and configure the Eclipse Yocto Project Plug-in, see the "Developing Applications Using Eclipse™" section.

1.1.5. User-Space Tools

User-space tools, which are available as part of the SDK development environment, can be helpful. The tools include LatencyTOP, PowerTOP, Perf, SystemTap, and Lttng-ust. These tools are common development tools for the Linux platform.

LatencyTOP: LatencyTOP focuses on latency that causes skips in audio, stutters in your desktop experience, or situations that overload your server even when you have plenty of CPU power left.

PowerTOP: Helps you determine what software is using the most power. You can find out more about PowerTOP at https://01.org/powertop/.

Perf: Performance counters for Linux used to keep track of certain types of hardware and software events. For more information on these types of counters see https://perf.wiki.kernel.org/. For examples on how to set up and use this tool, see the "perf" section in the Yocto Project Profiling and Tracing Manual.

SystemTap: A free software infrastructure that simplifies information gathering about a running Linux system. This information helps you diagnose performance or functional problems. SystemTap is not available as a user-space tool through the Eclipse IDE Yocto Plug-in. See http://sourceware.org/systemtap for more information on SystemTap. For examples on how to set up and use this tool, see the "SystemTap" section in the Yocto Project Profiling and Tracing Manual.

Lttng-ust: A User-space Tracer designed to provide detailed information on user-space activity. See http://lttng.org/ust for more information on Lttng-ust.

1.2. SDK Development Model¶

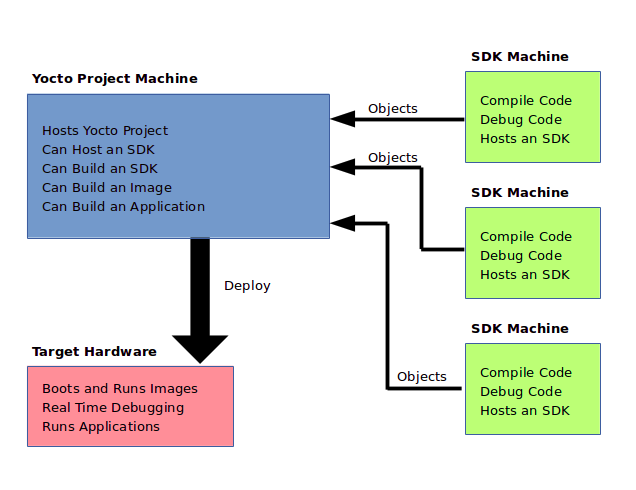

Fundamentally, the SDK fits into the development process as follows:

|

The SDK is installed on any machine and can be used to develop applications, images, and kernels. An SDK can even be used by a QA Engineer or Release Engineer. The fundamental concept is that the machine that has the SDK installed does not have to be associated with the machine that has the Yocto Project installed. A developer can independently compile and test an object on their machine and then, when the object is ready for integration into an image, they can simply make it available to the machine that has the Yocto Project. Once the object is available, the image can be rebuilt using the Yocto Project to produce the modified image.

You just need to follow these general steps:

Install the SDK for your target hardware: For information on how to install the SDK, see the "Installing the SDK" section.

Download the Target Image: The Yocto Project supports several target architectures and has many pre-built kernel images and root filesystem images.

If you are going to develop your application on hardware, go to the machines download area and choose a target machine area from which to download the kernel image and root filesystem. This download area could have several files in it that support development using actual hardware. For example, the area might contain .hddimg files that combine the kernel image with the filesystem, boot loaders, and so forth. Be sure to get the files you need for your particular development process.

If you are going to develop your application and then run and test it using the QEMU emulator, go to the machines/qemu download area. From this area, go down into the directory for your target architecture (e.g. qemux86_64 for an Intel®-based 64-bit architecture). Download kernel, root filesystem, and any other files you need for your process.

Note

To use the root filesystem in QEMU, you need to extract it. See the "Extracting the Root Filesystem" section for information on how to extract the root filesystem.

Develop and Test your Application: At this point, you have the tools to develop your application. If you need to separately install and use the QEMU emulator, you can go to QEMU Home Page to download and learn about the emulator. See the "Using the Quick EMUlator (QEMU)" chapter in the Yocto Project Development Manual for information on using QEMU within the Yocto Project.

The remainder of this manual describes how to use both the standard SDK and the extensible SDK. Information also exists in appendix form that describes how you can build, install, and modify an SDK.

Chapter 2. Using the Standard SDK

This chapter describes the standard SDK and how to use it. It covers the pieces of the SDK, explains how to install it, and presents several task-based procedures common for developing with a standard SDK.

Note

The tasks you can perform using a standard SDK are also applicable when you are using an extensible SDK. For information on the differences when using an extensible SDK as compared to a standard SDK, see the "Using the Extensible SDK" chapter.

2.1. Why use the Standard SDK and What is in It?

The Standard SDK provides a cross-development toolchain and libraries tailored to the contents of a specific image. You would use the Standard SDK if you want a more traditional toolchain experience.

The installed Standard SDK consists of several files and directories. Basically, it contains an SDK environment setup script, some configuration files, and host and target root filesystems to support usage. You can see the directory structure in the "Installed Standard SDK Directory Structure" section.

2.2. Installing the SDK

The first thing you need to do is install the SDK on your host development machine by running the *.sh installation script.

You can download a tarball installer, which includes the pre-built toolchain, the runqemu script, and support files from the appropriate directory under http://downloads.yoctoproject.org/releases/yocto/yocto-2.1/toolchain/. Toolchains are available for 32-bit and 64-bit x86 development systems from the i686 and x86_64 directories, respectively. The toolchains the Yocto Project provides are based off the core-image-sato image and contain libraries appropriate for developing against that image. Each type of development system supports five or more target architectures.

The names of the tarball installer scripts are such that a string representing the host system appears first in the filename and then is immediately followed by a string representing the target architecture.

poky-glibc-host_system-image_type-arch-toolchain-release_version.sh

Where:

host_system is a string representing your development system: i686 or x86_64.

image_type is the image for which the SDK was built.

arch is a string representing the tuned target architecture: i586, x86_64, powerpc, mips, armv7a or armv5te

release_version is a string representing the release number of the Yocto Project: 2.1, 2.1+snapshot

For example, the following toolchain installer is for a 64-bit development host system and an i586-tuned target architecture based off the SDK for core-image-sato and using the current 2.1 snapshot:

poky-glibc-x86_64-core-image-sato-i586-toolchain-2.1.sh
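The naming pattern can be sketched as a small shell function. This is only an illustration: the function name sdk_installer_name is hypothetical, and the function simply joins the four strings described above without checking that such an installer actually exists on the download site.

```shell
# Hypothetical helper: assemble an installer filename from its four parts,
# following the pattern
#   poky-glibc-host_system-image_type-arch-toolchain-release_version.sh
sdk_installer_name() {
    host_system=$1      # i686 or x86_64
    image_type=$2       # for example core-image-sato
    arch=$3             # for example i586 or armv5te
    release_version=$4  # for example 2.1
    echo "poky-glibc-${host_system}-${image_type}-${arch}-toolchain-${release_version}.sh"
}
```

Calling sdk_installer_name x86_64 core-image-sato i586 2.1 yields the example filename shown above.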

The SDK and toolchains are self-contained and by default are installed into /opt/poky. However, when you run the SDK installer, you can choose an installation directory.

Note

You must change the permissions on the toolchain installer script so that it is executable:

$ chmod +x poky-glibc-x86_64-core-image-sato-i586-toolchain-2.1.sh

The following command shows how to run the installer given a toolchain tarball for a 64-bit x86 development host system and a 32-bit x86 target architecture. The example assumes the toolchain installer is located in ~/Downloads/.

Note

If you do not have write permissions for the directory into which you are installing the SDK, the installer notifies you and exits. Be sure you have write permissions in the directory and run the installer again.

$ ./poky-glibc-x86_64-core-image-sato-i586-toolchain-2.1.sh

Poky (Yocto Project Reference Distro) SDK installer version 2.0

===============================================================

Enter target directory for SDK (default: /opt/poky/2.1):

You are about to install the SDK to "/opt/poky/2.1". Proceed[Y/n]? Y

Extracting SDK.......................................................................done

Setting it up...done

SDK has been successfully set up and is ready to be used.

Each time you wish to use the SDK in a new shell session, you need to source the environment setup script e.g.

$ . /opt/poky/2.1/environment-setup-i586-poky-linux

Again, reference the "Installed Standard SDK Directory Structure" section for more details on the resulting directory structure of the installed SDK.
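Because the installer exits when it cannot write to the chosen directory, it can be convenient to check the directory beforehand. The following is a minimal sketch of such a check. The function can_install_to is hypothetical and is not something the installer provides; it only tests ordinary file permissions on the nearest existing parent directory.

```shell
# Hypothetical pre-check (not part of the installer): succeed only if the
# current user could create or write to the given installation directory.
can_install_to() {
    dir=$1
    # Walk up to the nearest path component that already exists.
    while [ ! -e "$dir" ]; do
        dir=$(dirname "$dir")
    done
    # That component must be a directory the user can write to.
    [ -d "$dir" ] && [ -w "$dir" ]
}
```

For example, can_install_to /opt/poky/2.1 || echo "pick another directory or fix permissions" reports a problem before you start the installer.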

2.3. Running the SDK Environment Setup Script

Once you have the SDK installed, you must run the SDK environment setup script before you can actually use it. This setup script resides in the directory you chose when you installed the SDK. For information on where this setup script can reside, see the "Obtaining the SDK" Appendix.

Before running the script, be sure it is the one that matches the architecture for which you are developing. Environment setup scripts begin with the string "environment-setup" and include as part of their name the tuned target architecture. For example, the command to source a setup script for an IA-based target machine using i586 tuning and located in the default SDK installation directory is as follows:

$ source /opt/poky/2.1/environment-setup-i586-poky-linux

When you run the setup script, many environment variables are defined:

SDKTARGETSYSROOT - The path to the sysroot used for cross-compilation

PKG_CONFIG_PATH - The path to the target pkg-config files

CONFIG_SITE - A GNU autoconf site file preconfigured for the target

CC - The minimal command and arguments to run the C compiler

CXX - The minimal command and arguments to run the C++ compiler

CPP - The minimal command and arguments to run the C preprocessor

AS - The minimal command and arguments to run the assembler

LD - The minimal command and arguments to run the linker

GDB - The minimal command and arguments to run the GNU Debugger

STRIP - The minimal command and arguments to run 'strip', which strips symbols

RANLIB - The minimal command and arguments to run 'ranlib'

OBJCOPY - The minimal command and arguments to run 'objcopy'

OBJDUMP - The minimal command and arguments to run 'objdump'

AR - The minimal command and arguments to run 'ar'

NM - The minimal command and arguments to run 'nm'

TARGET_PREFIX - The toolchain binary prefix for the target tools

CROSS_COMPILE - The toolchain binary prefix for the target tools

CONFIGURE_FLAGS - The minimal arguments for GNU configure

CFLAGS - Suggested C flags

CXXFLAGS - Suggested C++ flags

LDFLAGS - Suggested linker flags when you use CC to link

CPPFLAGS - Suggested preprocessor flags
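A quick way to confirm that the setup script was sourced in the current shell is to check that some of these variables are present. The sketch below does that for a handful of names taken from the list above. The function name check_sdk_env and the choice of which variables to test are assumptions made for this example.

```shell
# Hypothetical helper: report which SDK variables are missing from the
# environment. Returns 0 when all of the checked variables are exported.
check_sdk_env() {
    missing=0
    for name in SDKTARGETSYSROOT CC CXX LD CFLAGS LDFLAGS CONFIGURE_FLAGS; do
        # printenv exits non-zero when the variable is not in the environment
        if ! printenv "$name" >/dev/null; then
            echo "not set: $name"
            missing=1
        fi
    done
    return "$missing"
}
```

If check_sdk_env prints any "not set" lines, source the environment setup script again in that shell before building.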

2.4. Autotools-Based Projects

Once you have a suitable cross-toolchain installed, it is very easy to develop a project outside of the OpenEmbedded build system. This section presents a simple "Helloworld" example that shows how to set up, compile, and run the project.

2.4.1. Creating and Running a Project Based on GNU Autotools

Follow these steps to create a simple Autotools-based project:

Create your directory: Create a clean directory for your project and then make that directory your working location:

$ mkdir $HOME/helloworld

$ cd $HOME/helloworld

Populate the directory: Create hello.c, Makefile.am, and configure.in files as follows:

For hello.c, include these lines:

#include <stdio.h>
int main(void)
{
    printf("Hello World!\n");
    return 0;
}

For Makefile.am, include these lines:

bin_PROGRAMS = hello
hello_SOURCES = hello.c

For configure.in, include these lines:

AC_INIT(hello.c)
AM_INIT_AUTOMAKE(hello,0.1)
AC_PROG_CC
AC_PROG_INSTALL
AC_OUTPUT(Makefile)

Source the cross-toolchain environment setup file: Installation of the cross-toolchain creates a cross-toolchain environment setup script in the directory in which the SDK was installed. Before you can use the tools to develop your project, you must source this setup script. The script begins with the string "environment-setup" and contains the machine architecture, which is followed by the string "poky-linux". Here is an example that sources a script from the default SDK installation directory that uses the 32-bit Intel x86 Architecture and the Krogoth Yocto Project release:

$ source /opt/poky/2.1/environment-setup-i586-poky-linux

Generate the local aclocal.m4 files and create the configure script: The following GNU Autotools commands generate the local aclocal.m4 files and create the configure script:

$ aclocal

$ autoconf

Generate files needed by GNU coding standards: GNU coding standards require certain files in order for the project to be compliant. This command creates those files:

$ touch NEWS README AUTHORS ChangeLog

Generate the Makefile.in file: This command generates Makefile.in, the template that the configure script turns into the Makefile, and adds any missing auxiliary files:

$ automake -a

Cross-compile the project: This command compiles the project using the cross-compiler. The CONFIGURE_FLAGS environment variable provides the minimal arguments for GNU configure:

$ ./configure ${CONFIGURE_FLAGS}

Make and install the project: These two commands generate and install the project into the destination directory:

$ make

$ make install DESTDIR=./tmp

Verify the installation: This command is a simple way to verify the installation of your project. Running the command prints the architecture on which the binary file can run. This architecture should be the same architecture that the installed cross-toolchain supports.

$ file ./tmp/usr/local/bin/hello

Execute your project: To execute the project in the shell, simply enter the name. You could also copy the binary to the actual target hardware and run the project there as well:

$ ./hello

As expected, the project displays the "Hello World!" message.
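The "Populate the directory" step can also be scripted. The following sketch writes the three project files into a directory of your choice. The function name populate_helloworld is hypothetical, and hello.c is written here with an explicit int main(void) and return value, which is an assumption made so that current compilers accept it without warnings. The Makefile.am and configure.in contents are the ones listed in the steps above.

```shell
# Hypothetical helper: write the three Helloworld project files into the
# directory given as the first argument, creating it if needed.
populate_helloworld() {
    project_dir=$1
    mkdir -p "$project_dir" || return 1

    # The C source for the example program
    cat > "$project_dir/hello.c" <<'EOF'
#include <stdio.h>

int main(void)
{
    printf("Hello World!\n");
    return 0;
}
EOF

    # Automake input: one program built from one source file
    cat > "$project_dir/Makefile.am" <<'EOF'
bin_PROGRAMS = hello
hello_SOURCES = hello.c
EOF

    # Autoconf input, as listed in the procedure
    cat > "$project_dir/configure.in" <<'EOF'
AC_INIT(hello.c)
AM_INIT_AUTOMAKE(hello,0.1)
AC_PROG_CC
AC_PROG_INSTALL
AC_OUTPUT(Makefile)
EOF
}
```

Running populate_helloworld "$HOME/helloworld" followed by cd "$HOME/helloworld" leaves you ready to source the environment setup script and continue with the remaining steps.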

2.4.2. Passing Host Options

For an Autotools-based project, you can use the cross-toolchain by just passing the appropriate host option to the configure script. The host option you use is derived from the name of the environment setup script found in the directory in which you installed the cross-toolchain. For example, the host option for an ARM-based target that uses the GNU EABI is armv5te-poky-linux-gnueabi. You will notice that the name of the script is environment-setup-armv5te-poky-linux-gnueabi. Thus, the following command works to update your project and rebuild it using the appropriate cross-toolchain tools:

$ ./configure --host=armv5te-poky-linux-gnueabi \

--with-libtool-sysroot=sysroot_dir

Note

If the configure script results in problems recognizing the --with-libtool-sysroot=sysroot_dir option, regenerate the script to enable the support by doing the following and then run the script again:

$ libtoolize --automake

$ aclocal -I ${OECORE_NATIVE_SYSROOT}/usr/share/aclocal \

[-I dir_containing_your_project-specific_m4_macros]

$ autoconf

$ autoheader

$ automake -a
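The relationship between the setup script name and the host option described in this section can be expressed as a one-line transformation. In the sketch below, the function name host_option_from_script is hypothetical; it only strips the directory and the "environment-setup-" prefix from the script path and does not verify that the script exists.

```shell
# Hypothetical helper: derive the --host value from a setup script path by
# dropping the directory and the "environment-setup-" prefix.
host_option_from_script() {
    script_name=$(basename "$1")
    echo "${script_name#environment-setup-}"
}
```

For instance, passing a path that ends in environment-setup-armv5te-poky-linux-gnueabi prints armv5te-poky-linux-gnueabi, which is the value to give to the --host option.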

2.5. Makefile-Based Projects

For Makefile-based projects, the cross-toolchain environment variables established by running the cross-toolchain environment setup script are subject to general make rules.

To illustrate this, consider the following four cross-toolchain environment variables:

CC=i586-poky-linux-gcc -m32 -march=i586 --sysroot=/opt/poky/2.1/sysroots/i586-poky-linux

LD=i586-poky-linux-ld --sysroot=/opt/poky/2.1/sysroots/i586-poky-linux

CFLAGS=-O2 -pipe -g -feliminate-unused-debug-types

CXXFLAGS=-O2 -pipe -g -feliminate-unused-debug-types

Now, consider the following three cases:

Case 1 - No Variables Set in the Makefile: Because these variables are not specifically set in the Makefile, the variables retain their values based on the environment.

Case 2 - Variables Set in the Makefile: Specifically setting variables in the Makefile during the build results in the environment settings of the variables being overwritten.

Case 3 - Variables Set when the Makefile is Executed from the Command Line: Executing the Makefile from the command line results in the variables being overwritten with command-line content regardless of what is being set in the Makefile. In this case, environment variables are not considered unless you use the "-e" flag during the build:

$ make -e file

If you use this flag, then the environment values of the variables override any variables specifically set in the Makefile.
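The three cases can be observed directly with a throwaway Makefile. The following sketch assumes GNU make is installed on the host. The function name demo_make_precedence and the values env-cc, makefile-cc, and cmdline-cc are placeholders invented for the demonstration; no compiler is actually run.

```shell
# Show which value of CC make uses in each case. The values env-cc,
# makefile-cc, and cmdline-cc are placeholders, not real compilers.
demo_make_precedence() {
    dir=$(mktemp -d) || return 1

    # One Makefile that does not set CC, and one that does.
    printf 'show:\n\t@echo $(CC)\n' > "$dir/unset.mk"
    printf 'CC = makefile-cc\nshow:\n\t@echo $(CC)\n' > "$dir/set.mk"

    # Case 1: nothing set in the Makefile, so the environment value is used.
    echo "case 1: $(CC=env-cc make -s -f "$dir/unset.mk")"
    # Case 2: the Makefile assignment replaces the environment value.
    echo "case 2: $(CC=env-cc make -s -f "$dir/set.mk")"
    # Case 3: a command-line assignment replaces the Makefile value.
    echo "case 3: $(CC=env-cc make -s -f "$dir/set.mk" CC=cmdline-cc)"
    # With -e, the environment value overrides the Makefile assignment.
    echo "with -e: $(CC=env-cc make -s -e -f "$dir/set.mk")"

    rm -rf "$dir"
}
```

With GNU make, calling demo_make_precedence is expected to print env-cc for case 1, makefile-cc for case 2, cmdline-cc for case 3, and env-cc for the "-e" run.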

Note

For the list of variables set up by the cross-toolchain environment setup script, see the "Running the SDK Environment Setup Script" section.

2.6. Developing Applications Using Eclipse™

If you are familiar with the popular Eclipse IDE, you can use an Eclipse Yocto Plug-in to allow you to develop, deploy, and test your application all from within Eclipse. This section describes general workflow using the SDK and Eclipse and how to configure and set up Eclipse.

2.6.1. Workflow Using Eclipse™¶

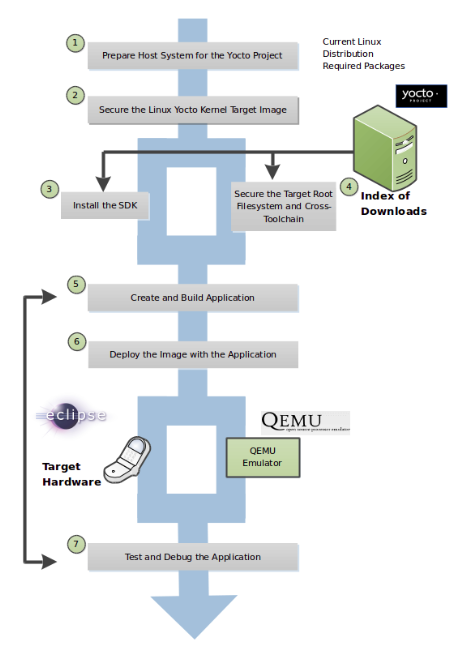

The following figure and supporting list summarize the application development general workflow that employs both the SDK Eclipse.

|

Prepare the host system for the Yocto Project: See "Supported Linux Distributions" and "Required Packages for the Host Development System" sections both in the Yocto Project Reference Manual for requirements. In particular, be sure your host system has the xterm package installed.

Secure the Yocto Project kernel target image: You must have a target kernel image that has been built using the OpenEmbedded build system.

Depending on whether the Yocto Project has a pre-built image that matches your target architecture and where you are going to run the image while you develop your application (QEMU or real hardware), the area from which you get the image differs.

Download the image from machines if your target architecture is supported and you are going to develop and test your application on actual hardware.

Download the image from machines/qemu if your target architecture is supported and you are going to develop and test your application using the QEMU emulator.

Build your image if you cannot find a pre-built image that matches your target architecture. If your target architecture is similar to a supported architecture, you can modify the kernel image before you build it. See the "Patching the Kernel" section in the Yocto Project Development manual for an example.

For information on pre-built kernel image naming schemes for images that can run on the QEMU emulator, see the Yocto Project Software Development Kit (SDK) Developer's Guide.

Install the SDK: The SDK provides a target-specific cross-development toolchain, the root filesystem, the QEMU emulator, and other tools that can help you develop your application. For information on how to install the SDK, see the "Installing the SDK" section.

Secure the target root filesystem and the Cross-development toolchain: You need to find and download the appropriate root filesystem and the cross-development toolchain.

You can find the tarballs for the root filesystem in the same area used for the kernel image. Depending on the type of image you are running, the root filesystem you need differs. For example, if you are developing an application that runs on an image that supports Sato, you need to get a root filesystem that supports Sato.

You can find the cross-development toolchains at toolchains. Be sure to get the correct toolchain for your development host and your target architecture. See the "Locating Pre-Built SDK Installers" section for information and the "Installing the SDK" section for installation information.

Create and build your application: At this point, you need to have source files for your application. Once you have the files, you can use the Eclipse IDE to import them and build the project. If you are not using Eclipse, you need to use the cross-development tools you have installed to create the image.

Deploy the image with the application: If you are using the Eclipse IDE, you can deploy your image to the hardware or to QEMU through the project's preferences. If you are not using the Eclipse IDE, then you need to deploy the application to the hardware using other methods. Or, if you are using QEMU, you need to use that tool and load your image in for testing. See the "Using the Quick EMUlator (QEMU)" chapter in the Yocto Project Development Manual for information on using QEMU.

Test and debug the application: Once your application is deployed, you need to test it. Within the Eclipse IDE, you can use the debugging environment along with the set of installed user-space tools to debug your application. Of course, the same user-space tools are available separately if you choose not to use the Eclipse IDE.

2.6.2. Working Within Eclipse

The Eclipse IDE is a popular development environment and it fully supports development using the Yocto Project.

Note

This release of the Yocto Project supports both the Luna and Kepler versions of the Eclipse IDE. Thus, the following information provides setup information for both versions.

When you install and configure the Eclipse Yocto Project Plug-in into the Eclipse IDE, you maximize your Yocto Project experience. Installing and configuring the Plug-in results in an environment that has extensions specifically designed to let you more easily develop software. These extensions allow for cross-compilation, deployment, and execution of your output into a QEMU emulation session as well as actual target hardware. You can also perform cross-debugging and profiling. The environment also supports a suite of tools that allows you to perform remote profiling, tracing, collection of power data, collection of latency data, and collection of performance data.

This section describes how to install and configure the Eclipse IDE Yocto Plug-in and how to use it to develop your application.

2.6.2.1. Setting Up the Eclipse IDE

To develop within the Eclipse IDE, you need to do the following:

Install the optimal version of the Eclipse IDE.

Configure the Eclipse IDE.

Install the Eclipse Yocto Plug-in.

Configure the Eclipse Yocto Plug-in.

Note

Do not install Eclipse from your distribution's package repository. Be sure to install Eclipse from the official Eclipse download site as directed in the next section.

2.6.2.1.1. Installing the Eclipse IDE

It is recommended that you have the Luna SR2 (4.4.2) version of the Eclipse IDE installed on your development system. However, if you currently have the Kepler 4.3.2 version installed and you do not want to upgrade the IDE, you can configure Kepler to work with the Yocto Project.

If you do not have the Luna SR2 (4.4.2) Eclipse IDE installed, you can find the tarball at http://www.eclipse.org/downloads. From that site, choose the appropriate download from the "Eclipse IDE for C/C++ Developers". This version contains the Eclipse Platform, the Java Development Tools (JDT), and the Plug-in Development Environment.

Once you have downloaded the tarball, extract it into a clean directory. For example, the following commands unpack and install the downloaded Eclipse IDE tarball into a clean directory using the default name eclipse:

$ cd ~

$ tar -xzvf ~/Downloads/eclipse-cpp-luna-SR2-linux-gtk-x86_64.tar.gz

2.6.2.1.2. Configuring the Eclipse IDE

This section presents the steps needed to configure the Eclipse IDE.

Before installing and configuring the Eclipse Yocto Plug-in, you need to configure the Eclipse IDE. Follow these general steps:

Start the Eclipse IDE.

Make sure you are in your Workbench and select "Install New Software" from the "Help" pull-down menu.

Select Luna - http://download.eclipse.org/releases/luna from the "Work with:" pull-down menu.

Note

For Kepler, select Kepler - http://download.eclipse.org/releases/kepler

Expand the box next to "Linux Tools" and select the Linux Tools LTTng Tracer Control, Linux Tools LTTng Userspace Analysis, and LTTng Kernel Analysis boxes. If these selections do not appear in the list, that means the items are already installed.

Note

For Kepler, select LTTng - Linux Tracing Toolkit box.

Expand the box next to "Mobile and Device Development" and select the following boxes. Again, if any of the following items are not available for selection, that means the items are already installed:

- C/C++ Remote Launch (Requires RSE Remote System Explorer)

- Remote System Explorer End-user Runtime

- Remote System Explorer User Actions

- Target Management Terminal (Core SDK)

- TCF Remote System Explorer add-in

- TCF Target Explorer

Expand the box next to "Programming Languages" and select the C/C++ Autotools Support and C/C++ Development Tools boxes. For Luna, these items do not appear on the list as they are already installed.

Complete the installation and restart the Eclipse IDE.

2.6.2.1.3. Installing or Accessing the Eclipse Yocto Plug-in¶

You can install the Eclipse Yocto Plug-in into the Eclipse IDE one of two ways: use the Yocto Project's Eclipse Update site to install the pre-built plug-in or build and install the plug-in from the latest source code.

2.6.2.1.3.1. Installing the Pre-built Plug-in from the Yocto Project Eclipse Update Site¶

To install the Eclipse Yocto Plug-in from the update site, follow these steps:

Start up the Eclipse IDE.

In Eclipse, select "Install New Software" from the "Help" menu.

Click "Add..." in the "Work with:" area.

Enter http://downloads.yoctoproject.org/releases/eclipse-plugin/2.1/luna in the URL field and provide a meaningful name in the "Name" field.

Note

If you are using Kepler, use http://downloads.yoctoproject.org/releases/eclipse-plugin/2.1/kepler in the URL field.

Click "OK" to have the entry added to the "Work with:" drop-down list.

Select the entry for the plug-in from the "Work with:" drop-down list.

Check the boxes next to Yocto Project ADT Plug-in, Yocto Project Bitbake Commander Plug-in, and Yocto Project Documentation plug-in.

Complete the remaining software installation steps and then restart the Eclipse IDE to finish the installation of the plug-in.

Note

You can click "OK" when prompted about installing software that contains unsigned content.

2.6.2.1.3.2. Installing the Plug-in Using the Latest Source Code¶

To install the Eclipse Yocto Plug-in from the latest source code, follow these steps:

Be sure your development system is not using OpenJDK to build the plug-in by doing the following:

Use the Oracle JDK. If you don't have that, go to http://www.oracle.com/technetwork/java/javase/downloads/jdk7-downloads-1880260.html and download the latest appropriate Java SE Development Kit tarball for your development system and extract it into your home directory.

In the shell in which you are going to do your work, export the location of the Oracle Java. The previous step creates a new folder for the extracted software. You need to use the following export command and provide the specific location:

export PATH=~/extracted_jdk_location/bin:$PATH

In the same shell, create a Git repository with:

$ cd ~

$ git clone git://git.yoctoproject.org/eclipse-poky

Be sure to check out the correct tag from within the cloned eclipse-poky directory. For example, if you are using Luna, do the following:

$ git checkout luna/yocto-2.1

This puts you in a detached HEAD state, which is fine since you are only going to be building and not developing.

Note

If you are building Kepler, check out the kepler/yocto-2.1 branch.

Change to the scripts directory within the Git repository:

$ cd scripts

Set up the local build environment by running the setup script:

$ ./setup.sh

When the script finishes execution, it prompts you with instructions on how to run the build.sh script, which is also in the scripts directory of the Git repository created earlier.

Run the build.sh script as directed. Be sure to provide the tag name, documentation branch, and a release name. Here is an example that uses the luna/yocto-2.1 tag, the master documentation branch, and krogoth for the release name:

$ ECLIPSE_HOME=/home/scottrif/eclipse-poky/scripts/eclipse ./build.sh luna/yocto-2.1 master krogoth 2>&1 | tee -a build.log

After running the script, the file org.yocto.sdk-release-date-archive.zip is in the current directory.

If necessary, start the Eclipse IDE and be sure you are in the Workbench.

Select "Install New Software" from the "Help" pull-down menu.

Click "Add".

Provide anything you want in the "Name" field.

Click "Archive" and browse to the ZIP file you built earlier. This ZIP file should not be "unzipped", and must be the *archive.zip file created by running the build.sh script.

Click the "OK" button.

Check the boxes that appear in the installation window to install the Yocto Project ADT Plug-in, Yocto Project Bitbake Commander Plug-in, and the Yocto Project Documentation plug-in.

Finish the installation by clicking through the appropriate buttons. You can click "OK" when prompted about installing software that contains unsigned content.

Restart the Eclipse IDE if necessary.

At this point you should be able to configure the Eclipse Yocto Plug-in as described in the "Configuring the Eclipse Yocto Plug-in" section.
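The first step of the source build asks that the plug-in not be built with OpenJDK. One way to confirm which Java the shell picks up after the PATH export is sketched below. It assumes that an OpenJDK build identifies itself with the string "OpenJDK" in the java -version banner; the helper name uses_openjdk is hypothetical:

```shell
# Sketch: report whether the first `java` on PATH identifies itself as OpenJDK.
# uses_openjdk is a hypothetical helper name.
uses_openjdk() {
    # `java -version` writes its banner to stderr, so merge it into stdout.
    java -version 2>&1 | grep -qi 'openjdk'
}

# Example, after prepending the extracted JDK to PATH:
#   export PATH=~/extracted_jdk_location/bin:$PATH
#   if uses_openjdk; then echo "java on PATH is still OpenJDK" >&2; fi
```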

2.6.2.1.4. Configuring the Eclipse Yocto Plug-in¶

Configuring the Eclipse Yocto Plug-in involves setting the Cross Compiler options and the Target options. The configurations you choose become the default settings for all projects. You do have opportunities to change them later when you configure the project (see the following section).

To start, you need to do the following from within the Eclipse IDE:

Choose "Preferences" from the "Window" menu to display the Preferences Dialog.

Click "Yocto Project ADT" to display the configuration screen.

2.6.2.1.4.1. Configuring the Cross-Compiler Options¶

To configure the Cross Compiler Options, you must select the type of toolchain, point to the toolchain, specify the sysroot location, and select the target architecture.

Selecting the Toolchain Type: Choose between Standalone pre-built toolchain and Build system derived toolchain for Cross Compiler Options.

Standalone Pre-built Toolchain: Select this mode when you are using a stand-alone cross-toolchain. For example, suppose you are an application developer and do not need to build a target image. Instead, you just want to use an architecture-specific toolchain on an existing kernel and target root filesystem.

Build System Derived Toolchain: Select this mode if the cross-toolchain has been installed and built as part of the Build Directory. When you select Build system derived toolchain, you are using the toolchain bundled inside the Build Directory.

Point to the Toolchain: If you are using a stand-alone pre-built toolchain, you should be pointing to where it is installed. See the "Installing the SDK" section for information about how the SDK is installed.

If you are using a system-derived toolchain, the path you provide for the Toolchain Root Location field is the Build Directory. See the "Building an SDK Installer" section.

Specify the Sysroot Location: This location is where the root filesystem for the target hardware resides.

The location of the sysroot filesystem depends on where you separately extracted and installed the filesystem.

For information on how to install the toolchain and on how to extract and install the sysroot filesystem, see the "Building an SDK Installer" section.

Select the Target Architecture: The target architecture is the type of hardware you are going to use or emulate. Use the pull-down Target Architecture menu to make your selection. The pull-down menu should have the supported architectures. If the architecture you need is not listed in the menu, you will need to build the image. See the "Building Images" section of the Yocto Project Quick Start for more information.

2.6.2.1.4.2. Configuring the Target Options¶

You can choose to emulate hardware using the QEMU emulator, or you can choose to run your image on actual hardware.

QEMU: Select this option if you will be using the QEMU emulator. If you are using the emulator, you also need to locate the kernel and specify any custom options.

If you selected Build system derived toolchain, the target kernel you built will be located in the Build Directory in the tmp/deploy/images/machine directory. If you selected Standalone pre-built toolchain, the pre-built image you downloaded is located in the directory you specified when you downloaded the image.

Most custom options are for advanced QEMU users to further customize their QEMU instance. These options are specified between paired angled brackets. Some options must be specified outside the brackets. In particular, the options serial, nographic, and kvm must all be outside the brackets. Use the man qemu command to get help on all the options and their use. The following is an example:

serial ‘<-m 256 -full-screen>’

Regardless of the mode, Sysroot is already defined as part of the Cross-Compiler Options configuration in the Sysroot Location: field.

External HW: Select this option if you will be using actual hardware.

Click "OK" to save your plug-in configurations.

2.6.2.2. Creating the Project¶

You can create two types of projects: Autotools-based, or Makefile-based. This section describes how to create Autotools-based projects from within the Eclipse IDE. For information on creating Makefile-based projects in a terminal window, see the "Makefile-Based Projects" section.

Note

Do not use special characters in project names (e.g. spaces, underscores, etc.). Doing so can cause configuration to fail.

To create a project based on a Yocto template and then display the source code, follow these steps:

Select "Project" from the "File -> New" menu.

Double click C/C++.

Double click C Project to create the project.

Expand Yocto Project ADT Autotools Project.

Select Hello World ANSI C Autotools Project. This is an Autotools-based project based on a Yocto template.

Put a name in the Project name: field. Do not use hyphens as part of the name.

Click "Next".

Add information in the Author and Copyright notice fields.

Be sure the License field is correct.

Click "Finish".

If the "open perspective" prompt appears, click "Yes" so that you are in the C/C++ perspective.

The left-hand navigation pane shows your project. You can display your source by double clicking the project's source file.

2.6.2.3. Configuring the Cross-Toolchains¶

The earlier section, "Configuring the Eclipse Yocto Plug-in", sets up the default project configurations. You can override these settings for a given project by following these steps:

Select "Change Yocto Project Settings" from the "Project" menu. This selection brings up the Yocto Project Settings Dialog and allows you to make changes specific to an individual project.

By default, the Cross Compiler Options and Target Options for a project are inherited from settings you provided using the Preferences Dialog as described earlier in the "Configuring the Eclipse Yocto Plug-in" section. The Yocto Project Settings Dialog allows you to override those default settings for a given project.

Make your configurations for the project and click "OK".

Right-click in the navigation pane and select "Reconfigure Project" from the pop-up menu. This selection reconfigures the project by running autogen.sh in the workspace for your project. The script also runs libtoolize, aclocal, autoconf, autoheader, automake --a, and ./configure. Click on the "Console" tab beneath your source code to see the results of reconfiguring your project.
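For readers who want to reproduce the "Reconfigure Project" step from a terminal, the sequence of tools named above can be sketched as follows. This is an illustration of that sequence only, not the contents of the template's autogen.sh, which may differ; the helper name reconfigure_project is hypothetical:

```shell
# Sketch: run the Autotools steps listed in the text, in the order listed.
# reconfigure_project is a hypothetical helper; the body runs in a subshell
# so the caller's working directory is left unchanged.
reconfigure_project() (
    # $1: project directory; remaining arguments are passed to ./configure.
    cd "$1" || exit 1
    shift
    libtoolize &&
    aclocal &&
    autoconf &&
    autoheader &&
    automake --a &&
    ./configure "$@"
)

# Example, from a shell where the SDK environment setup script has been
# sourced (the script is expected to set CONFIGURE_FLAGS for cross-builds):
#   reconfigure_project ~/workspace/hello $CONFIGURE_FLAGS
```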

2.6.2.4. Building the Project¶

To build the project select "Build Project" from the "Project" menu. The console should update and you can note the cross-compiler you are using.

Note

When building "Yocto Project ADT Autotools" projects, the Eclipse IDE might display error messages for Functions/Symbols/Types that cannot be "resolved", even when the related include file is listed at the project navigator and when the project is able to build. For these cases only, it is recommended to add a new linked folder to the appropriate sysroot. Use these steps to add the linked folder:

Select the project.

Select "Folder" from the File > New menu.

In the "New Folder" Dialog, select "Link to alternate location (linked folder)".

Click "Browse" to navigate to the include folder inside the same sysroot location selected in the Yocto Project configuration preferences.

Click "OK".

Click "Finish" to save the linked folder.

2.6.2.5. Starting QEMU in User-Space NFS Mode¶

To start the QEMU emulator from within Eclipse, follow these steps:

Note

See the "Using the Quick EMUlator (QEMU)" chapter in the Yocto Project Development Manual for more information on using QEMU.

Expose and select "External Tools" from the "Run" menu. Your image should appear as a selectable menu item.

Select your image from the menu to launch the emulator in a new window.

If needed, enter your host root password in the shell window at the prompt. This sets up a Tap 0 connection needed for running in user-space NFS mode.

Wait for QEMU to launch.

Once QEMU launches, you can begin operating within that environment. One useful task at this point would be to determine the IP Address for the user-space NFS by using the ifconfig command.
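As a hedged illustration of reading the address from ifconfig, the following sketch filters the output for the first non-loopback IPv4 address. It assumes the older net-tools/BusyBox output format ("inet addr:192.168.7.2 ..."); other ifconfig versions print a different layout. The helper name first_inet_addr and the interface name eth0 are assumptions:

```shell
# Sketch: pull the first non-loopback IPv4 address out of `ifconfig` output
# that uses the "inet addr:A.B.C.D" layout. Reads ifconfig output on stdin.
# first_inet_addr is a hypothetical helper name.
first_inet_addr() {
    sed -n 's/.*inet addr:\([0-9.]*\).*/\1/p' | grep -v '^127\.' | head -n 1
}

# On the target (the interface name eth0 is an assumption):
#   ifconfig eth0 | first_inet_addr
```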

2.6.2.6. Deploying and Debugging the Application¶

Once the QEMU emulator is running the image, you can deploy your application using the Eclipse IDE and then use the emulator to perform debugging. Follow these steps to deploy the application.

Note

Currently, Eclipse does not support SSH port forwarding. Consequently, if you need to run or debug a remote application using the host display, you must create a tunneling connection from outside Eclipse and keep that connection alive during your work. For example, in a new terminal, run the following:

ssh -XY user_name@remote_host_ip

After running the command, add the command to be executed in Eclipse's run configuration before the application as follows:

export DISPLAY=:10.0

Select "Debug Configurations..." from the "Run" menu.

In the left area, expand C/C++Remote Application.

Locate your project and select it to bring up a new tabbed view in the Debug Configurations Dialog.

Enter the absolute path into which you want to deploy the application. Use the "Remote Absolute File Path for C/C++Application:" field. For example, enter /usr/bin/programname.

Click on the "Debugger" tab to see the cross-tool debugger you are using.

Click on the "Main" tab.

Create a new connection to the QEMU instance by clicking on "new".

Select TCF, which means Target Communication Framework.

Click "Next".

Clear out the "host name" field and enter the IP Address determined earlier.

Click "Finish" to close the New Connections Dialog.

Use the drop-down menu now in the "Connection" field and pick the IP Address you entered.

Click "Debug" to bring up a login screen and log in.

Accept the debug perspective.

2.6.2.7. Running User-Space Tools¶

As mentioned earlier in the manual, several tools exist that enhance your development experience. These tools are aids in developing and debugging applications and images. You can run these user-space tools from within the Eclipse IDE through the "YoctoProjectTools" menu.

Once you pick a tool, you need to configure it for the remote target. Every tool needs to have the connection configured. You must select an existing TCF-based RSE connection to the remote target. If one does not exist, click "New" to create one.

Here are some specifics about the remote tools:

Lttng2.0 trace import: Selecting this tool transfers the remote target's Lttng tracing data back to the local host machine and uses the Lttng Eclipse plug-in to graphically display the output. For information on how to use Lttng to trace an application, see http://lttng.org/documentation and the "LTTng (Linux Trace Toolkit, next generation)" section, which is in the Yocto Project Profiling and Tracing Manual.

Note

Do not use the Lttng-user space (legacy) tool. This tool no longer has any upstream support.

Before you use the Lttng2.0 trace import tool, you need to set up the Lttng Eclipse plug-in and create a Tracing project. Do the following:

Select "Open Perspective" from the "Window" menu and then select "Other..." to bring up a menu of other perspectives. Choose "Tracing".

Click "OK" to change the Eclipse perspective into the Tracing perspective.

Create a new Tracing project by selecting "Project" from the "File -> New" menu.

Choose "Tracing Project" from the "Tracing" menu and click "Next".

Provide a name for your tracing project and click "Finish".

Generate your tracing data on the remote target.

Select "Lttng2.0 trace import" from the "Yocto Project Tools" menu to start the data import process.

Specify your remote connection name.

For the Ust directory path, specify the location of your remote tracing data. Make sure the location ends with ust (e.g. /usr/mysession/ust).

Click "OK" to complete the import process. The data is now in the local tracing project you created.

Right click on the data and then select "Generic CTF Trace" from the "Trace Type... -> Common Trace Format" menu to map the tracing type.

Right click the mouse and select "Open" to bring up the Eclipse Lttng Trace Viewer so you can view the tracing data.

PowerTOP: Selecting this tool runs PowerTOP on the remote target machine and displays the results in a new view called PowerTOP.

The "Time to gather data(sec):" field is the time passed in seconds before data is gathered from the remote target for analysis.

The "show pids in wakeups list:" field corresponds to the -p argument passed to PowerTOP.

LatencyTOP and Perf: LatencyTOP identifies system latency, while Perf monitors the system's performance counter registers. Selecting either of these tools causes an RSE terminal view to appear from which you can run the tools. Both tools refresh the entire screen to display results while they run. For more information on setting up and using perf, see the "perf" section in the Yocto Project Profiling and Tracing Manual.

SystemTap: SystemTap is a tool that lets you create and reuse scripts to examine the activities of a live Linux system. You can easily extract, filter, and summarize data that helps you diagnose complex performance or functional problems. For more information on setting up and using SystemTap, see the SystemTap Documentation.

yocto-bsp: The yocto-bsp tool lets you quickly set up a Board Support Package (BSP) layer. The tool requires a Metadata location, build location, BSP name, BSP output location, and a kernel architecture. For more information on the yocto-bsp tool outside of Eclipse, see the "Creating a new BSP Layer Using the yocto-bsp Script" section in the Yocto Project Board Support Package (BSP) Developer's Guide.
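The Lttng2.0 trace import steps above include generating tracing data on the remote target without showing how. A minimal sketch using the LTTng 2.x command-line client follows. It assumes the lttng client and a session daemon are available in the target image and that user-space data lands in a ust subdirectory of the output directory; the helper name record_ust_trace and its arguments are hypothetical:

```shell
# Sketch: record a user-space trace on the target with the LTTng 2.x client.
# record_ust_trace is a hypothetical helper name.
record_ust_trace() {
    session=$1    # session name, e.g. mysession
    outdir=$2     # trace output directory, e.g. /usr/mysession
    shift 2       # remaining arguments: the command to trace

    lttng create "$session" --output="$outdir" &&
    lttng enable-event --userspace --all &&
    lttng start &&
    "$@"
    status=$?
    # Always stop and tear down the session, even if the command failed.
    lttng stop
    lttng destroy "$session"
    return $status
}

# Example (the application path is an assumption):
#   record_ust_trace mysession /usr/mysession /usr/bin/programname
```

With the example invocation, the path to give the import dialog would be /usr/mysession/ust, matching the form shown in the steps.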

Chapter 3. Using the Extensible SDK¶

Table of Contents

- 3.1. Setting Up to Use the Extensible SDK

- 3.2. Using devtool in Your SDK Workflow

- 3.3. A Closer Look at devtool add

- 3.4. Working With Recipes

- 3.5. Restoring the Target Device to its Original State

- 3.6. Installing Additional Items Into the Extensible SDK

- 3.7. Updating the Extensible SDK

- 3.8. Creating a Derivative SDK With Additional Components

This chapter describes the extensible SDK and how to use it. The extensible SDK makes it easy to add new applications and libraries to an image, modify the source for an existing component, test changes on the target hardware, and ease integration into the rest of the OpenEmbedded build system.

Information in this chapter covers features that are not part of the standard SDK. In other words, the chapter presents information unique to the extensible SDK only. For information on how to use the standard SDK, see the "Using the Standard SDK" chapter.

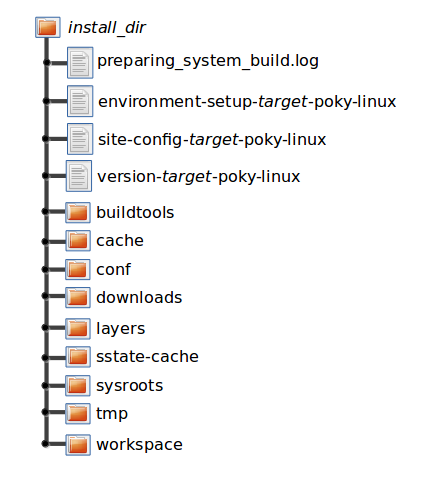

3.1. Setting Up to Use the Extensible SDK¶

Getting set up to use the extensible SDK is identical to getting set up to use the standard SDK. You still need to locate and run the installer and then run the environment setup script. See the "Installing the SDK" and the "Running the SDK Environment Setup Script" sections for general information. The following items highlight the only differences between getting set up to use the extensible SDK as compared to the standard SDK:

Default Installation Directory: By default, the extensible SDK installs into the poky_sdk folder of your home directory. As with the standard SDK, you can choose to install the extensible SDK in any location when you run the installer. However, unlike the standard SDK, the location you choose needs to be writable for whichever users need to use the SDK, since files will need to be written under that directory during the normal course of operation.

Build Tools and Build System: The extensible SDK installer performs additional tasks as compared to the standard SDK installer. The extensible SDK installer extracts build tools specific to the SDK and the installer also prepares the internal build system within the SDK. Here is example output for running the extensible SDK installer:

$ ./poky-glibc-x86_64-core-image-minimal-core2-64-toolchain-ext-2.1+snapshot.sh

Poky (Yocto Project Reference Distro) Extensible SDK installer version 2.1+snapshot

===================================================================================

Enter target directory for SDK (default: ~/poky_sdk):

You are about to install the SDK to "/home/scottrif/poky_sdk". Proceed[Y/n]? Y

Extracting SDK......................................................................done

Setting it up...

Extracting buildtools...

Preparing build system...

done

SDK has been successfully set up and is ready to be used.

Each time you wish to use the SDK in a new shell session, you need to source the environment setup script e.g.

$ . /home/scottrif/poky_sdk/environment-setup-core2-64-poky-linux

After installing the SDK, you need to run the SDK environment setup script. Here is the output:

$ source environment-setup-core2-64-poky-linux

SDK environment now set up; additionally you may now run devtool to perform development tasks.

Run devtool --help for further details.

Once you run the environment setup script, you have devtool available.
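Two of the points in this section lend themselves to a quick check before you start work: the install directory must be writable, and devtool is only available once the environment setup script has been sourced. The following sketch tests both; the helper name check_esdk is hypothetical, and the default path simply mirrors the installer default shown above:

```shell
# Sketch: sanity-check an extensible SDK install in the current shell.
# check_esdk is a hypothetical helper name.
check_esdk() {
    sdk_dir=${1:-$HOME/poky_sdk}

    [ -d "$sdk_dir" ] || { echo "no SDK directory at $sdk_dir" >&2; return 1; }
    # The extensible SDK writes under its install directory during normal use.
    [ -w "$sdk_dir" ] || { echo "$sdk_dir is not writable" >&2; return 1; }
    # devtool is expected on PATH only after the setup script has been sourced.
    command -v devtool >/dev/null 2>&1 ||
        { echo "devtool not found; source the environment setup script" >&2; return 1; }
}

# Example:
#   . ~/poky_sdk/environment-setup-core2-64-poky-linux
#   check_esdk ~/poky_sdk && devtool --help
```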

3.2. Using devtool in Your SDK Workflow¶

The cornerstone of the extensible SDK is a command-line tool called devtool. This tool provides a number of features that help you build, test and package software within the extensible SDK, and optionally integrate it into an image built by the OpenEmbedded build system.

The devtool command line is organized similarly to Git in that it has a number of sub-commands for each function. You can run devtool --help to see all the commands.

Two devtool subcommands that provide entry-points into development are:

devtool add: Assists in adding new software to be built.

devtool modify: Sets up an environment to enable you to modify the source of an existing component.

As with the OpenEmbedded build system, "recipes" represent software packages within devtool. When you use devtool add, a recipe is automatically created. When you use devtool modify, the specified existing recipe is used in order to determine where to get the source code and how to patch it. In both cases, an environment is set up so that when you build the recipe a source tree that is under your control is used in order to allow you to make changes to the source as desired. By default, both new recipes and the source go into a "workspace" directory under the SDK.

The remainder of this section presents the devtool add and devtool modify workflows.

3.2.1. Use devtool add to Add an Application¶

The devtool add command generates

a new recipe based on existing source code.

This command takes advantage of the

workspace

layer that many devtool commands

use.

The command is flexible enough to allow you to extract source

code into both the workspace or a separate local Git repository

and to use existing code that does not need to be extracted.

Depending on your particular scenario, the arguments and options

you use with devtool add form different

combinations.

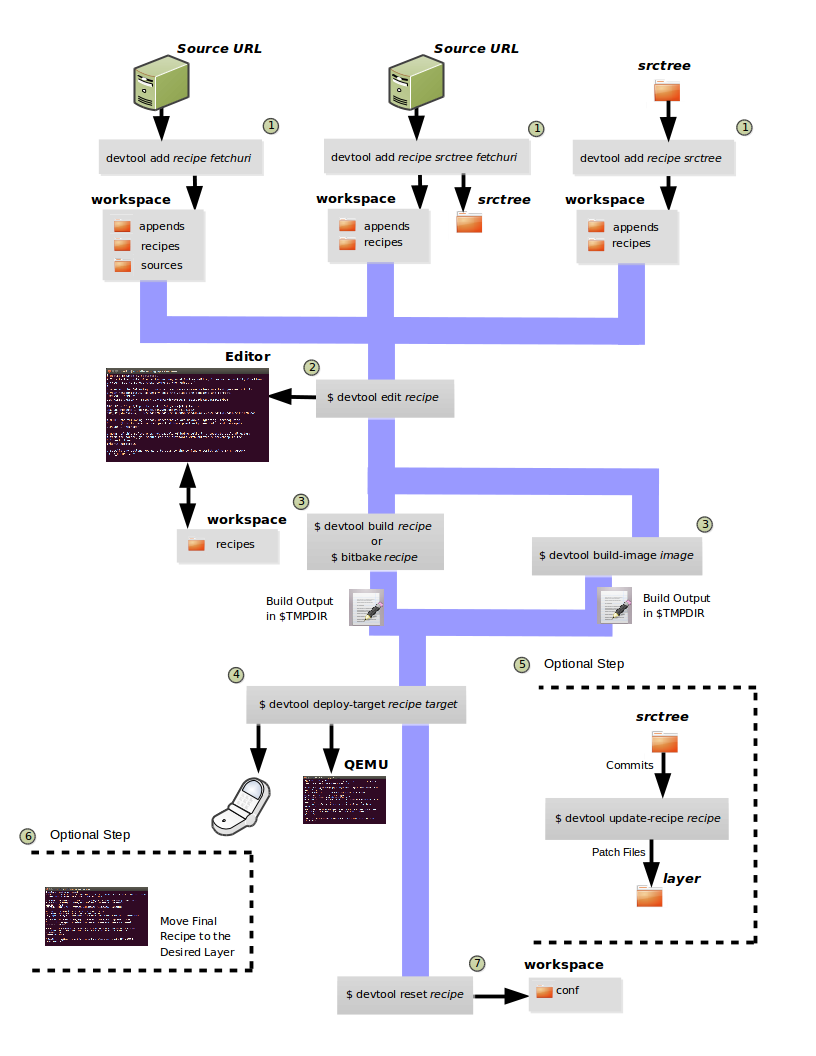

The following diagram shows common development flows

you would use with the devtool add

command:

Generating the New Recipe: The top part of the flow shows three scenarios by which you could use devtool add to generate a recipe based on existing source code.

In a shared development environment, it is typical for other developers to be responsible for various areas of source code. As a developer, you are probably interested in using that source code as part of your development using the Yocto Project. All you need is access to the code, a recipe, and a controlled area in which to do your work.

Within the diagram, three possible scenarios feed into the devtool add workflow:

Left: The left scenario represents a common situation where the source code does not exist locally and needs to be extracted. In this situation, you just let it get extracted to the default workspace - you do not want it in some specific location outside of the workspace. Thus, everything you need will be located in the workspace:

$ devtool add recipe fetchuri

With this command, devtool creates a recipe and an append file in the workspace as well as extracts the upstream source files into a local Git repository also within the sources folder.

Middle: The middle scenario also represents a situation where the source code does not exist locally. In this case, the code is again upstream and needs to be extracted to some local area - this time outside of the default workspace. As always, if required, devtool creates a Git repository locally during the extraction. Furthermore, the srctree positional argument in this case identifies where the devtool add command will locate the extracted code outside of the workspace:

$ devtool add recipe srctree fetchuri

In summary, the source code is pulled from fetchuri and extracted into the location defined by srctree as a local Git repository.

Within workspace, devtool creates both the recipe and an append file for the recipe.

Right: The right scenario represents a situation where the source tree (srctree) has been previously prepared outside of the devtool workspace.

The following command names the recipe and identifies where the existing source tree is located:

$ devtool add recipe srctree

The command examines the source code and creates a recipe for it, placing the recipe into the workspace.

Because the extracted source code already exists, devtool does not try to relocate it into the workspace - only the new recipe is placed in the workspace.

Aside from a recipe folder, the command also creates an append folder and places an initial *.bbappend within.

Edit the Recipe: At this point, you can use devtool edit-recipe to open up the editor as defined by the $EDITOR environment variable and modify the file:

$ devtool edit-recipe recipe

From within the editor, you can make modifications to the recipe that take effect when you build it later.

Build the Recipe or Rebuild the Image: At this point in the flow, the next step you take depends on what you are going to do with the new code.

If you need to take the build output and eventually move it to the target hardware, you would use devtool build:

$ devtool build recipe

On the other hand, if you want an image to contain the recipe's packages for immediate deployment onto a device (e.g. for testing purposes), you can use the devtool build-image command:

$ devtool build-image image

Deploy the Build Output: When you use the devtool build command to build out your recipe, you probably want to see if the resulting build output works as expected on target hardware.

Note

This step assumes you have a previously built image that is already either running in QEMU or running on actual hardware. Also, it is assumed that for deployment of the image to the target, SSH is installed in the image and if the image is running on real hardware that you have network access to and from your development machine.

You can deploy your build output to that target hardware by using the devtool deploy-target command:

$ devtool deploy-target recipe target

The target is a live target machine running as an SSH server.

You can, of course, also deploy the image you build using the devtool build-image command to actual hardware. However, devtool does not provide a specific command that allows you to do this.

Optionally Update the Recipe With Patch Files: Once you are satisfied with the recipe, if you have made any changes to the source tree that you want to have applied by the recipe, you need to generate patches from those changes. You do this before moving the recipe to its final layer and cleaning up the workspace area devtool uses. This optional step is especially relevant if you are using or adding third-party software.

To convert commits created using Git to patch files, use the devtool update-recipe command.

Note

Any changes you want to turn into patches must be committed to the Git repository in the source tree.

$ devtool update-recipe recipe

Move the Recipe to its Permanent Layer: Before cleaning up the workspace, you need to move the final recipe to its permanent layer. You must do this before using the devtool reset command if you want to retain the recipe.

Reset the Recipe: As a final step, you can restore the state such that standard layers and the upstream source are used to build the recipe rather than data in the workspace. To reset the recipe, use the devtool reset command:

$ devtool reset recipe
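Taken together, the first part of the devtool add flow (the "left" scenario, followed by build and deploy) can be sketched as a single helper. This is an illustration of the command order described above and not an official script; the helper name add_build_deploy and the example recipe name, URL, and target address are hypothetical:

```shell
# Sketch: "left" devtool add scenario, then build and deploy to a target.
# add_build_deploy is a hypothetical helper name. Each step runs only if
# the previous one succeeded.
add_build_deploy() {
    recipe=$1     # name for the new recipe
    fetchuri=$2   # upstream location of the source
    target=$3     # live target running an SSH server, e.g. user@host

    devtool add "$recipe" "$fetchuri" &&
    devtool build "$recipe" &&
    devtool deploy-target "$recipe" "$target"
}

# Example (all three values are placeholders):
#   add_build_deploy myapp https://example.com/myapp-1.0.tar.gz root@192.168.7.2
```

The later steps are left out of the helper on purpose: devtool update-recipe only picks up committed changes, and the recipe has to be moved to its permanent layer by hand before devtool reset is run.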

3.2.2. Use devtool modify to Modify the Source of an Existing Component¶

The devtool modify command prepares the

way to work on existing code that already has a recipe in

place.

The command is flexible enough to allow you to extract code,

specify the existing recipe, and keep track of and gather any

patch files from other developers that are

associated with the code.

Depending on your particular scenario, the arguments and options

you use with devtool modify form different

combinations.

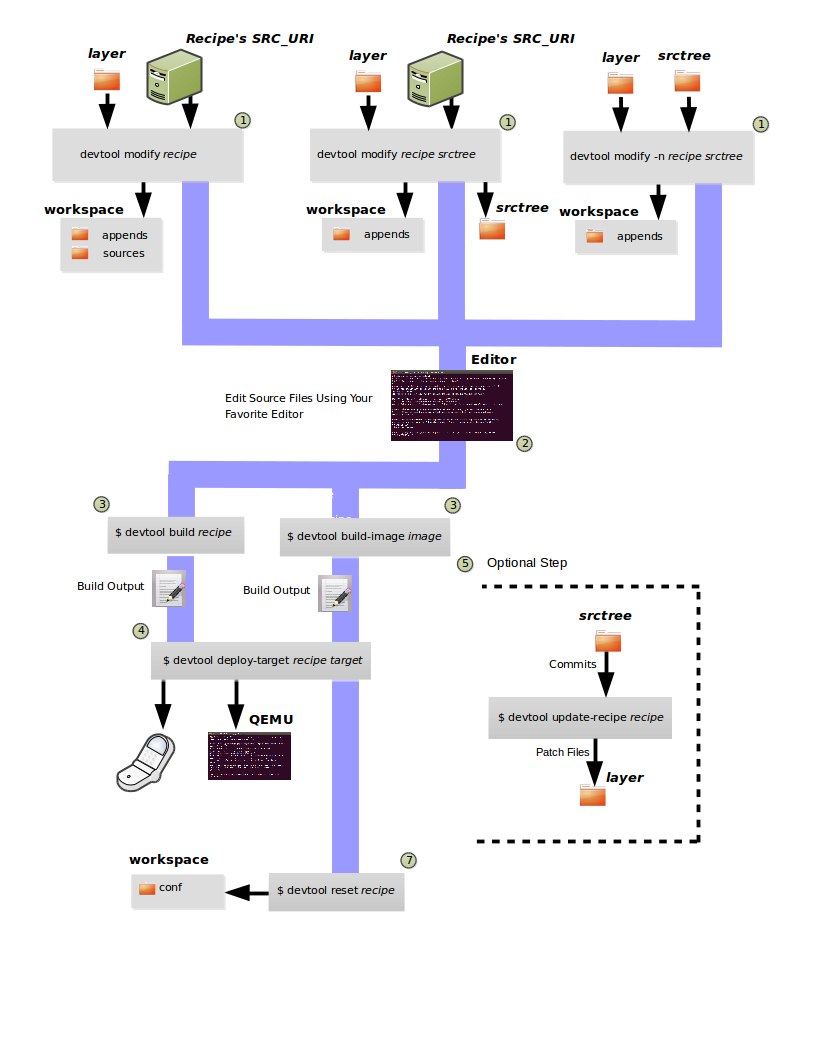

The following diagram shows common development flows

you would use with the devtool modify

command:

Preparing to Modify the Code: The top part of the flow shows three scenarios by which you could use

devtool modifyto prepare to work on source files. Each scenario assumes the following:The recipe exists in some layer external to the

devtoolworkspace.The source files exist upstream in an un-extracted state or locally in a previously extracted state.

The typical situation is where another developer has created some layer for use with the Yocto Project and their recipe already resides in that layer. Furthermore, their source code is readily available either upstream or locally.

Left: The left scenario represents a common situation where the source code does not exist locally and needs to be extracted. In this situation, the source is extracted into the default workspace location. The recipe, in this scenario, is in its own layer outside the workspace (i.e.

meta-layername).The following command identifies the recipe and by default extracts the source files:

$ devtool modifyrecipeOnce

devtoollocates the recipe, it uses theSRC_URIvariable to locate the source code and any local patch files from other developers are located.Note

You cannot provide an URL forsrctreewhen using thedevtool modifycommand.With this scenario, however, since no

srctreeargument exists, thedevtool modifycommand by default extracts the source files to a Git structure. Furthermore, the location for the extracted source is the default area within the workspace. The result is that the command sets up both the source code and an append file within the workspace with the recipe remaining in its original location.Middle: The middle scenario represents a situation where the source code also does not exist locally. In this case, the code is again upstream and needs to be extracted to some local area as a Git repository. The recipe, in this scenario, is again in its own layer outside the workspace.

The following command tells devtool which recipe to work with and, in this case, identifies a local area for the extracted source files that is outside of the default workspace:

$ devtool modify recipe srctree

As with all extractions, the command uses the recipe's SRC_URI to locate the source files. Once the files are located, the command by default extracts them. Providing the srctree argument tells devtool where to place the extracted source.

Within the workspace, devtool creates an append file for the recipe. The recipe remains in its original location but the source files are extracted to the location you provided with srctree.

Right: The right scenario represents a situation where the source tree (srctree) exists as a previously extracted Git structure outside of the devtool workspace. In this example, the recipe also exists elsewhere in its own layer.

The following command tells devtool which recipe to work with, uses the "-n" option to indicate that the source does not need to be extracted, and uses srctree to point to the previously extracted source files:

$ devtool modify -n recipe srctree

The command creates only an append file for the recipe in the workspace. The recipe and the source code remain in their original locations.

Edit the Source: Once you have used the devtool modify command, you are free to make changes to the source files. You can use any editor you like to make and save your source code modifications.

Build the Recipe: Once you have updated the source files, you can build the recipe.

Deploy the Build Output: When you use the devtool build command to build your recipe, you probably want to see if the resulting build output works as expected on target hardware.

Note

This step assumes you have a previously built image that is already running either in QEMU or on actual hardware. It also assumes that SSH is installed in the image and, if the image is running on real hardware, that you have network access to and from your development machine.

You can deploy your build output to that target hardware by using the devtool deploy-target command:

$ devtool deploy-target recipe target

The target is a live target machine running as an SSH server.

You can, of course, also deploy the image you build using the devtool build-image command to actual hardware. However, devtool does not provide a specific command that allows you to do this.

Optionally Create Patch Files for Your Changes: After you have debugged your changes, you can use devtool update-recipe to generate patch files for all the commits you have made.

Note

Patch files are generated only for changes you have committed.

$ devtool update-recipe recipe

By default, the devtool update-recipe command creates the patch files in a folder named the same as the recipe beneath the folder in which the recipe resides, and updates the recipe's SRC_URI statement to point to the generated patch files.

Note

You can use the "--append LAYERDIR" option to cause the command to create append files in a specific layer rather than the default recipe layer.

Restore the Workspace: The devtool reset command restores the state so that standard layers and upstream sources are used to build the recipe rather than what is in the workspace.

$ devtool reset recipe
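The steps above can be summarized as a command sequence. The following is a minimal sketch and not part of devtool itself: the helper names run and modify_cycle, the DRY_RUN variable, the recipe name myrecipe, and the target root@192.168.7.2 are all hypothetical. It covers the Left scenario and, by default, only prints the commands so that you can review their order. Editing and committing your source changes still happens by hand between the modify and build steps, so running the sequence for real without pausing there is unlikely to be what you want.

```shell
#!/bin/sh
# Sketch of the devtool modify workflow (Left scenario).
# run, modify_cycle, and DRY_RUN are hypothetical helper names.

# Print the command when DRY_RUN is 1 (the default); otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# $1 = recipe name, $2 = target machine reachable over SSH
modify_cycle() {
    run devtool modify "$1"              # extract source, create append file
    # ... edit and commit your changes in the extracted source here ...
    run devtool build "$1"               # build the recipe
    run devtool deploy-target "$1" "$2"  # copy the build output to the target
    run devtool update-recipe "$1"       # turn committed changes into patch files
    run devtool reset "$1"               # stop building from the workspace
}

modify_cycle myrecipe root@192.168.7.2
```

With the default dry-run setting, the script prints one line per devtool command, each prefixed with "+ ".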

3.3. A Closer Look at devtool add

The devtool add command automatically creates a recipe based on the source tree that you provide.

Currently, the command has support for the following:

Autotools (autoconf and automake)

CMake

SCons

qmake

Plain Makefile

Out-of-tree kernel module

Binary package (i.e. "-b" option)

Node.js module through npm

Python modules that use setuptools or distutils

Apart from binary packages, the determination of how a source tree should be treated is automatic based on the files present within that source tree. For example, if a CMakeLists.txt file is found, then the source tree is assumed to be using CMake and is treated accordingly.
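The file-based detection described above can be pictured as a series of checks for well-known marker files. The following is a minimal sketch only and does not reproduce devtool's actual logic: apart from CMakeLists.txt, which the text above names, the marker files, the order in which they are checked, and the function name guess_build_system are assumptions chosen for illustration.

```shell
# Hypothetical sketch: guess a build system from marker files in a source tree.
# $1 = path to the source tree
guess_build_system() {
    dir=$1
    if [ -f "$dir/CMakeLists.txt" ]; then
        echo cmake
    elif [ -f "$dir/configure.ac" ] || [ -f "$dir/configure.in" ]; then
        echo autotools
    elif [ -f "$dir/SConstruct" ]; then
        echo scons
    elif [ -f "$dir/setup.py" ]; then
        echo setuptools
    elif [ -f "$dir/package.json" ]; then
        echo npm
    elif [ -f "$dir/Makefile" ]; then
        # Checked last because other build systems often ship a Makefile too.
        echo makefile
    else
        echo unknown
    fi
}

# Example: guess_build_system /path/to/source
```

A plain Makefile is checked last in this sketch so that a tree containing both CMakeLists.txt and a Makefile is still reported as CMake.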

Note

In most cases, you need to edit the automatically generated recipe in order to make it build properly. Typically, you would go through several edit and build cycles until you can build the recipe. Once the recipe builds, you might need further iterations to test it on the target device.

The remainder of this section covers specifics regarding how parts of the recipe are generated.

3.3.1. Name and Version

If you do not specify a name and version on the command line, devtool add attempts to determine the name and version of the software being built from various metadata within the source tree. Furthermore, the command sets the name of the created recipe file accordingly. If the name or version cannot be determined, the devtool add command prints an error and you must re-run the command with the name, the version, or both specified.

Sometimes the name or version determined from the source tree might be incorrect. For such a case, you must reset the recipe:

$ devtool reset -n recipename

After running the devtool reset command, you need to run devtool add again and provide the name or the version.

3.3.2. Dependency Detection and Mapping

The devtool add command attempts to detect build-time dependencies and map them to other recipes in the system. During this mapping, the command fills in the names of those recipes in the DEPENDS value within the recipe. If a dependency cannot be mapped, then a comment is placed in the recipe indicating such. A dependency might fail to map because the naming is not recognized or because the dependency simply is not available. For cases where the dependency is not available, you must use the devtool add command to add an additional recipe to satisfy the dependency and then come back to the first recipe and add its name to DEPENDS.

If you need to add runtime dependencies, you can do so by adding the following to your recipe:

RDEPENDS_${PN} += "dependency1 dependency2 ..."

Note

The devtool add command often cannot distinguish between mandatory and optional dependencies. Consequently, some of the detected dependencies might in fact be optional. When in doubt, consult the documentation or the configure script for the software the recipe is building for further details. In some cases, you might find you can replace the dependency with an option, passed to the configure script, that disables the associated functionality.

3.3.3. License Detection

The devtool add command attempts to determine if the software you are adding is able to be distributed under a common open-source license and sets the LICENSE value accordingly. You should double-check this value against the documentation or source files for the software you are building and update that LICENSE value if necessary.

The devtool add command also sets the LIC_FILES_CHKSUM value to point to all files that appear to be license-related. However, license statements often appear in comments at the top of source files or within documentation. Consequently, you might need to amend the LIC_FILES_CHKSUM variable to point to one or more of those comments if present.
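Each LIC_FILES_CHKSUM entry pairs a file path with a checksum of the license text. As a minimal sketch, assuming the md5sum utility is available on the build host and that you run the helper from the top of the source tree, the hypothetical function lic_chksum_entry below prints an entry in the file://path;md5=checksum form for a given file. The function name and the COPYING file name are illustrative only.

```shell
# Hypothetical helper: print a LIC_FILES_CHKSUM-style entry for one file.
# $1 = path to the license file, relative to the top of the source tree
lic_chksum_entry() {
    # md5sum prints "<checksum>  <file>"; keep only the checksum.
    sum=$(md5sum "$1" | cut -d ' ' -f 1)
    printf 'file://%s;md5=%s\n' "$1" "$sum"
}

# Example: lic_chksum_entry COPYING
```

You can paste the printed entry into the LIC_FILES_CHKSUM value of the recipe after confirming that the file really does contain the license text.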

Setting LIC_FILES_CHKSUM is particularly important for third-party software. The mechanism attempts to ensure correct licensing should you upgrade the recipe to a newer upstream version in future. Any change in licensing is detected and you receive an error prompting you to check the license text again.

If the devtool add command cannot determine licensing information, the LICENSE value is set to "CLOSED" and the LIC_FILES_CHKSUM value remains unset. This behavior allows you to continue with development but is unlikely to be correct in all cases. Consequently, you should check the documentation or source files for the software you are building to determine the actual license.

3.3.4. Adding Makefile-Only Software

The use of make by itself is very common in both proprietary and open source software. Unfortunately, Makefiles are often not written with cross-compilation in mind. Thus, devtool add often cannot do very much to ensure that these Makefiles build correctly. It is very common, for example, to explicitly call gcc instead of using the CC variable. Usually, in a cross-compilation environment, gcc is the compiler for the build host and the cross-compiler is named something similar to arm-poky-linux-gnueabi-gcc and might require some arguments (e.g. to point to the associated sysroot for the target machine).

When writing a recipe for Makefile-only software, keep the following in mind:

You probably need to patch the Makefile to use variables instead of hardcoding tools within the toolchain such as gcc and g++.

The environment in which make runs is set up with various standard variables for compilation (e.g. CC, CXX, and so forth) in a similar manner to the environment set up by the SDK's environment setup script. One easy way to see these variables is to run the devtool build command on the recipe and then look in oe-logs/run.do_compile. Towards the top of this file you will see a list of environment variables that are being set. You can take advantage of these variables within the Makefile.

If the Makefile sets a default for a variable using "=", that default overrides the value set in the environment, which is usually not desirable. In this situation, you can either patch the Makefile so it sets the default using the "?=" operator, or you can alternatively force the value on the make command line. To force the value on the command line, add the variable setting to EXTRA_OEMAKE within the recipe as follows:

EXTRA_OEMAKE += "'CC=${CC}' 'CXX=${CXX}'"

In the above example, single quotes are used around the variable settings as the values are likely to contain spaces because required default options are passed to the compiler.

Hardcoding paths inside Makefiles is often problematic in a cross-compilation environment. This is particularly true because those hardcoded paths often point to locations on the build host and thus will either be read-only or will introduce contamination into the cross-compilation by virtue of being specific to the build host rather than the target. Patching the Makefile to use prefix variables or other path variables is usually the way to handle this.

Sometimes a Makefile runs target-specific commands such as ldconfig. For such cases, you might be able to simply apply patches that remove these commands from the Makefile.
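The difference between "=", "?=", and a command-line setting in a Makefile can be checked on the build host without involving a recipe. The following is a minimal sketch, assuming make is installed: the file names hard.mk and soft.mk, the helper show_cc, and the compiler string my-cross-gcc --sysroot=/hypothetical/sysroot are all made up for illustration and stand in for the values the build environment would provide.

```shell
# Demonstrates how make resolves CC for "=", "?=", and a command-line override.
# The file names and the compiler string are hypothetical.
demo_dir=$(mktemp -d)
cross_cc="my-cross-gcc --sysroot=/hypothetical/sysroot"

# hard.mk assigns CC with "=", soft.mk assigns it with "?=".
printf 'CC = gcc\nall: ; @echo "CC is $(CC)"\n'  > "$demo_dir/hard.mk"
printf 'CC ?= gcc\nall: ; @echo "CC is $(CC)"\n' > "$demo_dir/soft.mk"

# Run make on one of the files with CC set in the environment.
# $1 = makefile name; any remaining arguments are passed to make.
show_cc() {
    mk=$1
    shift
    CC="$cross_cc" make -s -f "$demo_dir/$mk" "$@"
}

# show_cc hard.mk                 prints: CC is gcc
# show_cc soft.mk                 prints: CC is my-cross-gcc --sysroot=/hypothetical/sysroot
# show_cc hard.mk "CC=$cross_cc"  prints: CC is my-cross-gcc --sysroot=/hypothetical/sysroot
```

The third call corresponds to what adding the setting to EXTRA_OEMAKE achieves: a value given on the make command line takes precedence over an "=" assignment inside the Makefile, and the quoting keeps the compiler and its options together as one setting.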

3.3.5. Adding Native Tools

Often, you need to build additional tools that run on the build host system as opposed to the target. You should indicate this using one of the following methods when you run devtool add:

Specify the name of the recipe such that it ends with "-native". Specifying the name like this produces a recipe that only builds for the build host.

Specify the "--also-native" option with the devtool add command. Specifying this option creates a recipe file that still builds for the target but also creates a variant with a "-native" suffix that builds for the build host.

Note

If you need to add a tool that is shipped as part of a source tree that builds code for the target, you can typically accomplish this by building the native and target parts separately rather than within the same compilation process. Realize though that with the "--also-native" option, you can add the tool using just one recipe file.

3.3.6. Adding Node.js Modules

You can use the devtool add command in the following form to add Node.js modules:

$ devtool add "npm://registry.npmjs.org;name=forever;version=0.15.1"

The name and version parameters are mandatory. Lockdown and shrinkwrap files are generated and pointed to by the recipe in order to freeze the dependency versions at those fetched the first time. Checksums are also saved and verified on future fetches. Together, these behaviors ensure the reproducibility and integrity of the build.

Notes

You must use quotes around the URL. The devtool add command does not require the quotes, but the shell considers ";" as a splitter between multiple commands. Thus, without the quotes, devtool add does not receive the other parts of the URL, and the shell instead treats them as separate commands, which can produce errors such as "command not found" or can fail silently, depending on your shell.

To support adding Node.js modules, a nodejs recipe must be part of your SDK to provide Node.js itself.
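The effect of the quotes can be seen without running devtool at all. In the following minimal sketch, the hypothetical function show_first_arg stands in for devtool add and simply prints the first argument it receives, so that you can compare what arrives with and without quoting.

```shell
# Hypothetical stand-in for devtool add: print the first argument received.
show_first_arg() {
    printf '%s\n' "$1"
}

# Quoted: the whole URL arrives as a single argument.
quoted=$(show_first_arg "npm://registry.npmjs.org;name=forever;version=0.15.1")

# Unquoted: the shell ends the command at the first ";". The remaining
# pieces, name=forever and version=0.15.1, run as separate shell commands.
unquoted=$(show_first_arg npm://registry.npmjs.org;name=forever;version=0.15.1)

echo "quoted:   $quoted"
echo "unquoted: $unquoted"
```

In the unquoted case only npm://registry.npmjs.org reaches the function, which is why the mandatory name and version parameters never reach devtool add.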

3.4. Working With Recipes

When building a recipe with devtool build, the typical build progression is as follows:

Fetch the source

Unpack the source

Configure the source

Compile the source

Install the build output

Package the installed output

For recipes in the workspace, fetching and unpacking are disabled as the source tree has already been prepared and is persistent. Each of these build steps is defined as a function, usually with a "do_" prefix. These functions are typically shell scripts but can instead be written in Python.

If you look at the contents of a recipe, you will see that the