Yocto Project Overview and Concepts Manual

Copyright © 2010-2018 Linux Foundation

Permission is granted to copy, distribute and/or modify this document under the terms of the Creative Commons Attribution-Share Alike 2.0 UK: England & Wales as published by Creative Commons.

Manual Notes

This version of the Yocto Project Overview and Concepts Manual is for the 2.5.1 release of the Yocto Project. To be sure you have the latest version of the manual for this release, go to the Yocto Project documentation page and select the manual from that site. Manuals from the site are more up-to-date than manuals derived from the Yocto Project released TAR files.

If you located this manual through a web search, the version of the manual might not be the one you want (e.g. the search might have returned a manual much older than the Yocto Project version with which you are working). You can see all Yocto Project major releases by visiting the Releases page. If you need a version of this manual for a different Yocto Project release, visit the Yocto Project documentation page and select the manual set by using the "ACTIVE RELEASES DOCUMENTATION" or "DOCUMENTS ARCHIVE" pull-down menus.

To report any inaccuracies or problems with this manual, send an email to the Yocto Project discussion group at yocto@yoctoproject.com or log into the freenode #yocto channel.

Revision History

| Revision | Date | Description |
|---|---|---|
| 2.5 | May 2018 | The initial document released with the Yocto Project 2.5 Release. |
| 2.5.1 | September 2018 | The initial document released with the Yocto Project 2.5.1 Release. |

Table of Contents

- 1. The Yocto Project Overview and Concepts Manual

- 2. Introducing the Yocto Project

- 3. The Yocto Project Development Environment

- 4. Yocto Project Concepts

Chapter 1. The Yocto Project Overview and Concepts Manual

1.1. Welcome

Welcome to the Yocto Project Overview and Concepts Manual! This manual introduces the Yocto Project by providing concepts, software overviews, best-known-methods (BKMs), and any other high-level introductory information suitable for a new Yocto Project user.

The following list describes what you can get from this manual:

Introducing the Yocto Project: This chapter provides an introduction to the Yocto Project. You will learn about features and challenges of the Yocto Project, the layer model, components and tools, development methods, the Poky reference distribution, the OpenEmbedded build system workflow, and some basic Yocto terms.

The Yocto Project Development Environment: This chapter helps you get started understanding the Yocto Project development environment. You will learn about open source, development hosts, Yocto Project source repositories, workflows using Git and the Yocto Project, a Git primer, and information about licensing.

Yocto Project Concepts: This chapter presents various concepts regarding the Yocto Project. You can find conceptual information about components, development, cross-toolchains, and so forth.

This manual does not give you the following:

Step-by-step Instructions for Development Tasks: Instructional procedures reside in other manuals within the Yocto Project documentation set. For example, the Yocto Project Development Tasks Manual provides examples on how to perform various development tasks. As another example, the Yocto Project Application Development and the Extensible Software Development Kit (eSDK) manual contains detailed instructions on how to install an SDK, which is used to develop applications for target hardware.

Reference Material: This type of material resides in an appropriate reference manual. For example, system variables are documented in the Yocto Project Reference Manual. As another example, the Yocto Project Board Support Package (BSP) Developer's Guide contains reference information on BSPs.

Detailed Public Information Not Specific to the Yocto Project: For example, exhaustive information on how to use the Source Control Manager Git is better covered with Internet searches and official Git Documentation than through the Yocto Project documentation.

1.2. Other Information

Because this manual presents information for many different topics, supplemental information is recommended for full comprehension. For additional introductory information on the Yocto Project, see the Yocto Project Website. If you want to build an image with no knowledge of Yocto Project as a way of quickly testing it out, see the Yocto Project Quick Build document. For a comprehensive list of links and other documentation, see the "Links and Related Documentation" section in the Yocto Project Reference Manual.

Chapter 2. Introducing the Yocto Project

2.1. What is the Yocto Project?

The Yocto Project is an open source collaboration project that helps developers create custom Linux-based systems that are designed for embedded products regardless of the product's hardware architecture. Yocto Project provides a flexible toolset and a development environment that allows embedded device developers across the world to collaborate through shared technologies, software stacks, configurations, and best practices used to create these tailored Linux images.

Thousands of developers worldwide have discovered that Yocto Project provides advantages in both systems and applications development, archival and management benefits, and customizations used for speed, footprint, and memory utilization. The project is a standard when it comes to delivering embedded software stacks. The project allows software customizations and build interchange for multiple hardware platforms as well as software stacks that can be maintained and scaled.

For further introductory information on the Yocto Project, you might be interested in this article by Drew Moseley and in this short introductory video.

The remainder of this section overviews advantages and challenges tied to the Yocto Project.

2.1.1. Features

The following list describes features and advantages of the Yocto Project:

Widely Adopted Across the Industry: Semiconductor, operating system, software, and service vendors exist whose products and services adopt and support the Yocto Project. For a look at the Yocto Project community and the companies involved with the Yocto Project, see the "COMMUNITY" and "ECOSYSTEM" tabs on the Yocto Project home page.

Architecture Agnostic: Yocto Project supports Intel, ARM, MIPS, AMD, PPC and other architectures. Most ODMs, OSVs, and chip vendors create and supply BSPs that support their hardware. If you have custom silicon, you can create a BSP that supports that architecture.

Aside from lots of architecture support, the Yocto Project fully supports a wide range of device emulation through the Quick EMUlator (QEMU).

Images and Code Transfer Easily: Yocto Project output can easily move between architectures without moving to new development environments. Additionally, if you have used the Yocto Project to create an image or application and you find yourself not able to support it, commercial Linux vendors such as Wind River, Mentor Graphics, Timesys, and ENEA could take it and provide ongoing support. These vendors have offerings that are built using the Yocto Project.

Flexibility: Corporations use the Yocto Project in many different ways. One example is to create an internal Linux distribution as a code base the corporation can use across multiple product groups. Through customization and layering, a project group can leverage the base Linux distribution to create a distribution that works for their product needs.

Ideal for Constrained Embedded and IoT devices: Unlike a full Linux distribution, you can use the Yocto Project to create exactly what you need for embedded devices. You only add the feature support or packages that you absolutely need for the device. For devices that have display hardware, you can use available system components such as X11, GTK+, Qt, Clutter, and SDL (among others) to create a rich user experience. For devices that do not have a display or where you want to use alternative UI frameworks, you can choose to not install these components.

Comprehensive Toolchain Capabilities: Toolchains for supported architectures satisfy most use cases. However, if your hardware supports features that are not part of a standard toolchain, you can easily customize that toolchain through specification of platform-specific tuning parameters. And, should you need to use a third-party toolchain, mechanisms built into the Yocto Project allow for that.

Mechanism Rules Over Policy: Focusing on mechanism rather than policy ensures that you are free to set policies based on the needs of your design instead of adopting decisions enforced by some system software provider.

Uses a Layer Model: The Yocto Project layer infrastructure groups related functionality into separate bundles. You can incrementally add these grouped functionalities to your project as needed. Using layers to isolate and group functionality reduces project complexity and redundancy, allows you to easily extend the system, make customizations, and keep functionality organized.

Supports Partial Builds: You can build and rebuild individual packages as needed. Yocto Project accomplishes this through its shared-state cache (sstate) scheme. Being able to build and debug components individually eases project development.

Releases According to a Strict Schedule: Major releases occur on a six-month cycle predictably in October and April. The most recent two releases support point releases to address common vulnerabilities and exposures. This predictability is crucial for projects based on the Yocto Project and allows development teams to plan activities.

Rich Ecosystem of Individuals and Organizations: For open source projects, the value of community is very important. Support forums, expertise, and active developers who continue to push the Yocto Project forward are readily available.

Binary Reproducibility: The Yocto Project allows you to be very specific about dependencies and achieves very high percentages of binary reproducibility (e.g. 99.8% for core-image-minimal). When distributions are not specific about which packages are pulled in and in what order to support dependencies, other build systems can arbitrarily include packages.

License Manifest: The Yocto Project provides a license manifest for review by people who need to track the use of open source licenses (e.g. legal teams).

2.1.2. Challenges

The following list presents challenges you might encounter when developing using the Yocto Project:

Steep Learning Curve: The Yocto Project has a steep learning curve and has many different ways to accomplish similar tasks. It can be difficult to choose how to proceed when varying methods exist by which to accomplish a given task.

Understanding What Changes You Need to Make For Your Design Requires Some Research: Beyond the simple tutorial stage, understanding what changes need to be made for your particular design can require a significant amount of research and investigation. For information that helps you transition from trying out the Yocto Project to using it for your project, see the "What I wish I'd Known" and "Transitioning to a Custom Environment for Systems Development" documents on the Yocto Project website.

Project Workflow Could Be Confusing: The Yocto Project workflow could be confusing if you are used to traditional desktop and server software development. In a desktop development environment, mechanisms exist to easily pull and install new packages, which are typically pre-compiled binaries from servers accessible over the Internet. Using the Yocto Project, you must modify your configuration and rebuild to add additional packages.

Working in a Cross-Build Environment Can Feel Unfamiliar: When developing code to run on a target, compilation, execution, and testing done on the actual target can be faster than running a BitBake build on a development host and then deploying binaries to the target for test. While the Yocto Project does support development tools on the target, the additional step of integrating your changes back into the Yocto Project build environment would be required. Yocto Project supports an intermediate approach that involves making changes on the development system within the BitBake environment and then deploying only the updated packages to the target.

The Yocto Project OpenEmbedded build system produces packages in standard formats (i.e. RPM, DEB, IPK, and TAR). You can deploy these packages into the running system on the target by using utilities on the target such as rpm or ipk.

Initial Build Times Can be Significant: Long initial build times are unfortunately unavoidable due to the large number of packages initially built from scratch for a fully functioning Linux system. Once that initial build is completed, however, the shared-state (sstate) cache mechanism Yocto Project uses keeps the system from rebuilding packages that have not been "touched" since the last build. The sstate mechanism significantly reduces times for successive builds.
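As a rough illustration of how the sstate mechanism can be reused across builds, the following sketch appends two settings to a build directory's local.conf so that several build directories can share one cache. SSTATE_DIR and DL_DIR are build system variables. The directory names build and shared-cache are assumptions made for this example, and in a real setup conf/local.conf is normally created by the build environment setup script rather than by hand.

```shell
#!/bin/sh
# Sketch: point a build directory at a shared sstate cache and download area.
# BUILD_DIR and CACHE_ROOT are hypothetical locations chosen for this example.
set -e

BUILD_DIR=${BUILD_DIR:-build}
CACHE_ROOT=${CACHE_ROOT:-$PWD/shared-cache}

mkdir -p "$BUILD_DIR/conf" "$CACHE_ROOT/sstate-cache" "$CACHE_ROOT/downloads"

# Append rather than overwrite, so existing local.conf settings are preserved.
cat >> "$BUILD_DIR/conf/local.conf" <<EOF
# Reuse previously built output and downloaded sources across build directories.
SSTATE_DIR = "$CACHE_ROOT/sstate-cache"
DL_DIR = "$CACHE_ROOT/downloads"
EOF

cat "$BUILD_DIR/conf/local.conf"
```

Any build directory whose local.conf points at the same locations can then pick up shared-state objects produced by an earlier build instead of rebuilding them.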

2.2. The Yocto Project Layer Model

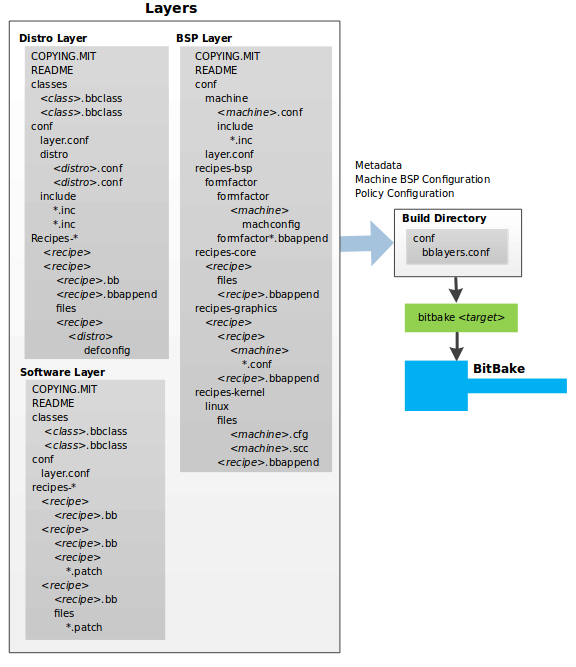

The Yocto Project's "Layer Model" is a development model for embedded and IoT Linux creation that distinguishes the Yocto Project from other simple build systems. The Layer Model simultaneously supports collaboration and customization. Layers are repositories that contain related sets of instructions that tell the OpenEmbedded build system what to do. You can collaborate, share, and reuse layers.

Layers can contain changes to previous instructions or settings at any time. This powerful override capability is what allows you to customize previously supplied collaborative or community layers to suit your product requirements.

You use different layers to logically separate information in your build. As an example, you could have BSP, GUI, distro configuration, middleware, or application layers. Putting your entire build into one layer limits and complicates future customization and reuse. Isolating information into layers, on the other hand, helps simplify future customizations and reuse. You might find it tempting to keep everything in one layer when working on a single project. However, the more modular your Metadata, the easier it is to cope with future changes.

Notes

Use Board Support Package (BSP) layers from silicon vendors when possible.

Familiarize yourself with the Yocto Project curated layer index or the OpenEmbedded layer index. The latter contains more layers but they are less universally validated.

Layers support the inclusion of technologies, hardware components, and software components. The Yocto Project Compatible designation provides a minimum level of standardization that contributes to a strong ecosystem. "YP Compatible" is applied to appropriate products and software components such as BSPs, other OE-compatible layers, and related open-source projects, allowing the producer to use Yocto Project badges and branding assets.

To illustrate how layers are used to keep things modular, consider machine customizations. These types of customizations typically reside in a special layer called a BSP Layer, rather than in a general layer. Furthermore, the machine customizations should be isolated from recipes and Metadata that support a new GUI environment, for example. This situation gives you a couple of layers: one for the machine configurations, and one for the GUI environment. It is important to understand, however, that the BSP layer can still make machine-specific additions to recipes within the GUI environment layer without polluting the GUI layer itself with those machine-specific changes. You can accomplish this through a recipe that is a BitBake append (.bbappend) file, which is described later in this section.

Note

For general information on BSP layer structure, see the Yocto Project Board Support Packages (BSP) Developer's Guide.
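To make the BSP-layer and .bbappend discussion above more concrete, the following sketch creates the skeleton of a BSP layer that appends a machine-specific setting to a recipe owned by a separate GUI layer. Every name in it (meta-mybsp, mygui-app, mymachine, and the --enable-touchscreen option) is hypothetical and chosen only for illustration. The override syntax matches the 2.5 release series that this manual covers.

```shell
#!/bin/sh
# Sketch: a minimal BSP layer that appends to a recipe owned by a GUI layer.
# All names (meta-mybsp, mygui-app, mymachine) are hypothetical.
set -e

LAYER=meta-mybsp
mkdir -p "$LAYER/conf" "$LAYER/recipes-graphics/mygui-app"

# conf/layer.conf tells BitBake where this layer keeps recipes and append files.
cat > "$LAYER/conf/layer.conf" <<'EOF'
BBPATH .= ":${LAYERDIR}"
BBFILES += "${LAYERDIR}/recipes-*/*/*.bb \
            ${LAYERDIR}/recipes-*/*/*.bbappend"
BBFILE_COLLECTIONS += "mybsp"
BBFILE_PATTERN_mybsp = "^${LAYERDIR}/"
BBFILE_PRIORITY_mybsp = "6"
EOF

# The append file adds a machine-specific option to the GUI layer's recipe
# without editing the GUI layer itself. "%" matches any recipe version.
cat > "$LAYER/recipes-graphics/mygui-app/mygui-app_%.bbappend" <<'EOF'
FILESEXTRAPATHS_prepend := "${THISDIR}/files:"
EXTRA_OECONF_append_mymachine = " --enable-touchscreen"
EOF

find "$LAYER" -type f | sort
```

Because the change lives in the BSP layer, the GUI layer stays free of machine-specific settings, and removing the BSP layer from a build removes the customization with it.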

The Source Directory contains both general layers and BSP layers right out of the box. You can easily identify layers that ship with a Yocto Project release in the Source Directory by their names. Layers typically have names that begin with the string meta-.

Note

It is not a requirement that a layer name begin with the prefix meta-, but it is a commonly accepted standard in the Yocto Project community.

For example, if you examine the tree view of the poky repository, you will see several layers: meta, meta-skeleton, meta-selftest, meta-poky, and meta-yocto-bsp. Each of these repositories represents a distinct layer.

For procedures on how to create layers, see the "Understanding and Creating Layers" section in the Yocto Project Development Tasks Manual.

2.3. Components and Tools

The Yocto Project employs a collection of components and tools used by the project itself, by project developers, and by those using the Yocto Project. These components and tools are open source projects and metadata that are separate from the reference distribution (Poky) and the OpenEmbedded build system. Most of the components and tools are downloaded separately.

This section provides brief overviews of the components and tools associated with the Yocto Project.

2.3.1. Development Tools

The following list consists of tools that help you develop images and applications using the Yocto Project:

CROPS: CROPS is an open source, cross-platform development framework that leverages Docker Containers. CROPS provides an easily managed, extensible environment that allows you to build binaries for a variety of architectures on Windows, Linux and Mac OS X hosts.

devtool: This command-line tool is available as part of the extensible SDK (eSDK) and is its cornerstone. You can use devtool to help build, test, and package software within the eSDK. You can use the tool to optionally integrate what you build into an image built by the OpenEmbedded build system.

The devtool command employs a number of sub-commands that allow you to add, modify, and upgrade recipes. As with the OpenEmbedded build system, “recipes” represent software packages within devtool. When you use devtool add, a recipe is automatically created. When you use devtool modify, the specified existing recipe is used in order to determine where to get the source code and how to patch it. In both cases, an environment is set up so that when you build the recipe a source tree that is under your control is used in order to allow you to make changes to the source as desired. By default, both new recipes and the source go into a “workspace” directory under the eSDK. The devtool upgrade command updates an existing recipe so that you can build it for an updated set of source files.

You can read about the devtool workflow in the Yocto Project Application Development and Extensible Software Development Kit (eSDK) Manual in the "Using devtool in Your SDK Workflow" section.

Extensible Software Development Kit (eSDK): The eSDK provides a cross-development toolchain and libraries tailored to the contents of a specific image. The eSDK makes it easy to add new applications and libraries to an image, modify the source for an existing component, test changes on the target hardware, and integrate into the rest of the OpenEmbedded build system. The eSDK gives you a toolchain experience supplemented with the powerful set of devtool commands tailored for the Yocto Project environment.

For information on the eSDK, see the Yocto Project Application Development and the Extensible Software Development Kit (eSDK) Manual.

Eclipse™ IDE Plug-in: This plug-in enables you to use the popular Eclipse Integrated Development Environment (IDE), which allows for development using the Yocto Project all within the Eclipse IDE. You can work within Eclipse to cross-compile, deploy, and execute your output into a QEMU emulation session as well as onto actual target hardware.

The environment also supports performance enhancing tools that allow you to perform remote profiling, tracing, collection of power data, collection of latency data, and collection of performance data.

Once you enable the plug-in, standard Eclipse functions automatically use the cross-toolchain and target system libraries. You can build applications using any of these libraries.

For more information on the Eclipse plug-in, see the "Working Within Eclipse" section in the Yocto Project Application Development and the Extensible Software Development Kit (eSDK) manual.

Toaster: Toaster is a web interface to the Yocto Project OpenEmbedded build system. Toaster allows you to configure, run, and view information about builds. For information on Toaster, see the Toaster User Manual.
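The devtool sub-commands described in the list above can be sketched as a short session. This is only an outline under stated assumptions: the recipe names myapp and zlib and the example.com URL are hypothetical, and the small run helper is not part of devtool. By default the helper only prints each command, so the script is safe to run outside an eSDK. Set DRY_RUN=0 in an initialized eSDK environment to execute the commands.

```shell
#!/bin/sh
# Sketch of a devtool session. "myapp", "zlib", and the URL are hypothetical.
# run() is a helper for this example only: it prints each command and executes
# it only when DRY_RUN=0 (the default, DRY_RUN=1, prints without executing).
run() {
    printf '+ %s\n' "$*"
    [ "${DRY_RUN:-1}" = 1 ] || "$@"
}

# Create a new recipe from a source location; the recipe and the source
# tree land in the workspace.
run devtool add myapp https://example.com/myapp.git

# Build the recipe from the source tree in the workspace.
run devtool build myapp

# Extract the source of an existing recipe into the workspace for editing.
run devtool modify zlib

# Update an existing recipe so it builds a newer set of source files.
run devtool upgrade zlib
```

Printing commands by default is a design choice for this sketch only. In day-to-day use you would type the devtool commands directly in the eSDK shell.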

2.3.2. Production Tools

The following list consists of tools that help with production-related activities using the Yocto Project:

Auto Upgrade Helper: This utility, when used in conjunction with the OpenEmbedded build system (BitBake and OE-Core), automatically generates upgrades for recipes that are based on new versions of the recipes published upstream.

Recipe Reporting System: The Recipe Reporting System tracks recipe versions available for Yocto Project. The main purpose of the system is to help you manage the recipes you maintain and to offer a dynamic overview of the project. The Recipe Reporting System is built on top of the OpenEmbedded Layer Index, which is a website that indexes OpenEmbedded-Core layers.

Patchwork: Patchwork is a fork of a project originally started by OzLabs. The project is a web-based tracking system designed to streamline the process of bringing contributions into a project. The Yocto Project uses Patchwork as an organizational tool to handle patches, which number in the thousands for every release.

AutoBuilder: AutoBuilder is a project that automates build tests and quality assurance (QA). By using the public AutoBuilder, anyone can determine the status of the current "master" branch of Poky.

Note

AutoBuilder is based on buildbot.

A goal of the Yocto Project is to lead the open source industry with a project that automates testing and QA procedures. In doing so, the project encourages a development community that publishes QA and test plans, publicly demonstrates QA and test plans, and encourages development of tools that automate test and QA procedures for the benefit of the development community.

You can learn more about the AutoBuilder used by the Yocto Project here.

Cross-Prelink: Prelinking is the process of pre-computing the load addresses and link tables generated by the dynamic linker as compared to doing this at runtime. Doing this ahead of time results in performance improvements when the application is launched and reduced memory usage for libraries shared by many applications.

Historically, cross-prelink is a variant of prelink, which was conceived by Jakub Jelínek a number of years ago. Both prelink and cross-prelink are maintained in the same repository albeit on separate branches. By providing an emulated runtime dynamic linker (i.e. glibc-derived ld.so emulation), the cross-prelink project extends the prelink software’s ability to prelink a sysroot environment. Additionally, the cross-prelink software enables the ability to work in sysroot style environments.

The dynamic linker determines standard load address calculations based on a variety of factors such as mapping addresses, library usage, and library function conflicts. The prelink tool uses this information, from the dynamic linker, to determine unique load addresses for executable and linkable format (ELF) binaries that are shared libraries and dynamically linked. The prelink tool modifies these ELF binaries with the pre-computed information. The result is faster loading and often lower memory consumption because more of the library code can be re-used from shared Copy-On-Write (COW) pages.

The original upstream prelink project only supports running prelink on the end target device due to the reliance on the target device’s dynamic linker. This restriction causes issues when developing a cross-compiled system. The cross-prelink adds a synthesized dynamic loader that runs on the host, thus permitting cross-prelinking without ever having to run on a read-write target filesystem.

Pseudo: Pseudo is the Yocto Project implementation of fakeroot, which is used to run commands in an environment that seemingly has root privileges.

During a build, it can be necessary to perform operations that require system administrator privileges. For example, file ownership or permissions might need definition. Pseudo is a tool that you can either use directly or through the environment variable LD_PRELOAD. Either method allows these operations to succeed as if system administrator privileges exist even when they do not.

You can read more about Pseudo in the "Fakeroot and Pseudo" section.

2.3.3. OpenEmbedded Build System Components

The following list consists of components associated with the OpenEmbedded build system:

BitBake: BitBake is a core component of the Yocto Project and is used by the OpenEmbedded build system to build images. While BitBake is key to the build system, BitBake is maintained separately from the Yocto Project.

BitBake is a generic task execution engine that allows shell and Python tasks to be run efficiently and in parallel while working within complex inter-task dependency constraints. In short, BitBake is a build engine that works through recipes written in a specific format in order to perform sets of tasks.

You can learn more about BitBake in the BitBake User Manual.

OpenEmbedded-Core: OpenEmbedded-Core (OE-Core) is a common layer of metadata (i.e. recipes, classes, and associated files) used by OpenEmbedded-derived systems, which includes the Yocto Project. The Yocto Project and the OpenEmbedded Project both maintain the OpenEmbedded-Core. You can find the OE-Core metadata in the Yocto Project Source Repositories.

Historically, the Yocto Project integrated the OE-Core metadata throughout the Yocto Project source repository reference system (Poky). After Yocto Project Version 1.0, the Yocto Project and OpenEmbedded agreed to work together and share a common core set of metadata (OE-Core), which contained much of the functionality previously found in Poky. This collaboration achieved a long-standing OpenEmbedded objective for having a more tightly controlled and quality-assured core. The results also fit well with the Yocto Project objective of achieving a smaller number of fully featured tools as compared to many different ones.

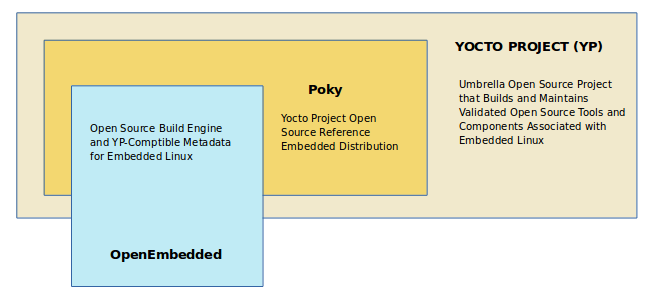

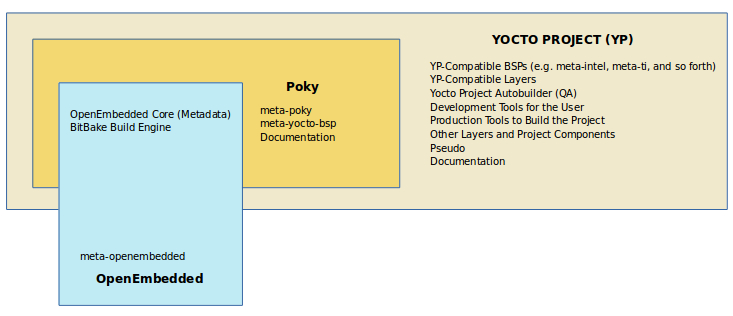

Sharing a core set of metadata results in Poky as an integration layer on top of OE-Core. You can see that in this figure. The Yocto Project combines various components such as BitBake, OE-Core, script “glue”, and documentation for its build system.
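To give a feel for the "recipes written in a specific format" that BitBake works through, the following sketch writes a very small recipe and its source file into a layer directory. The names meta-example, recipes-example, and hello are hypothetical, and the recipe is deliberately minimal rather than a model of good packaging practice.

```shell
#!/bin/sh
# Sketch: write a tiny recipe plus its source file into a hypothetical layer.
# The names meta-example, recipes-example, and hello are illustrative only.
set -e

RECIPE_DIR=meta-example/recipes-example/hello
mkdir -p "$RECIPE_DIR/files"

# Source file that the recipe builds.
cat > "$RECIPE_DIR/files/hello.c" <<'EOF'
#include <stdio.h>
int main(void) { printf("Hello from a BitBake-built binary\n"); return 0; }
EOF

# The recipe: variables describe the software, do_* functions are shell tasks.
cat > "$RECIPE_DIR/hello_1.0.bb" <<'EOF'
SUMMARY = "Hypothetical hello-world example"
# CLOSED skips license checksum verification; a real recipe names its license.
LICENSE = "CLOSED"
SRC_URI = "file://hello.c"
S = "${WORKDIR}"

do_compile() {
    ${CC} ${CFLAGS} ${LDFLAGS} hello.c -o hello
}

do_install() {
    install -d ${D}${bindir}
    install -m 0755 hello ${D}${bindir}
}
EOF

ls "$RECIPE_DIR"
```

Each do_ function is a task. With the layer enabled in a build, BitBake schedules tasks such as these, together with the fetch and packaging tasks the build system supplies, according to their dependencies.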

2.3.4. Reference Distribution (Poky)

Poky is the Yocto Project reference distribution. It contains the OpenEmbedded build system (BitBake and OE-Core) as well as a set of metadata to get you started building your own distribution. See the figure in the "What is the Yocto Project?" section for an illustration that shows Poky and its relationship with other parts of the Yocto Project.

To use the Yocto Project tools and components, you can download (clone) Poky and use it to bootstrap your own distribution.

Note

Poky does not contain binary files. It is a working example of how to build your own custom Linux distribution from source.

You can read more about Poky in the "Reference Embedded Distribution (Poky)" section.

2.3.5. Packages for Finished Targets

The following lists components associated with packages for finished targets:

Matchbox: Matchbox is an Open Source, base environment for the X Window System running on non-desktop, embedded platforms such as handhelds, set-top boxes, kiosks, and anything else for which screen space, input mechanisms, or system resources are limited.

Matchbox consists of a number of interchangeable and optional applications that you can tailor to a specific, non-desktop platform to enhance usability in constrained environments.

You can find the Matchbox source in the Yocto Project Source Repositories.

Opkg: Open PacKaGe management (opkg) is a lightweight package management system based on the itsy package (ipkg) management system. Opkg is written in C and resembles Advanced Package Tool (APT) and Debian Package (dpkg) in operation.

Opkg is intended for use on embedded Linux devices and is used in this capacity in the OpenEmbedded and OpenWrt projects, as well as the Yocto Project.

Note

As best it can, opkg maintains backwards compatibility with ipkg and conforms to a subset of Debian’s policy manual regarding control files.

2.3.6. Archived Components

The Build Appliance is a virtual machine image that enables you to build and boot a custom embedded Linux image with the Yocto Project using a non-Linux development system.

Historically, the Build Appliance was the second of three methods by which you could use the Yocto Project on a system that was not native to Linux.

Hob: Hob, which is now deprecated and has not been available since the 2.1 release of the Yocto Project, provided a rudimentary, GUI-based interface to the Yocto Project. Toaster has fully replaced Hob.

Build Appliance: Post Hob, the Build Appliance became available. It was never recommended that you use the Build Appliance as a day-to-day production development environment with the Yocto Project. Build Appliance was useful as a way to try out development in the Yocto Project environment.

CROPS: The final and best solution available now for developing using the Yocto Project on a system not native to Linux is with CROPS.

2.4. Development Methods

The Yocto Project development environment usually involves a Build Host and target hardware. You use the Build Host to build images and develop applications, while you use the target hardware to test deployed software.

This section provides an introduction to the choices or development methods you have when setting up your Build Host. Depending on your particular workflow preference and the type of operating system your Build Host runs, several choices exist that allow you to use the Yocto Project.

Note

For additional detail about the Yocto Project development environment, see the "The Yocto Project Development Environment" chapter.

Native Linux Host: By far the best option for a Build Host. A system running Linux as its native operating system allows you to develop software by directly using the BitBake tool. You can accomplish all aspects of development from a familiar shell of a supported Linux distribution.

For information on how to set up a Build Host on a system running Linux as its native operating system, see the "Setting Up a Native Linux Host" section in the Yocto Project Development Tasks Manual.

CROss PlatformS (CROPS): Typically, you use CROPS, which leverages Docker Containers, to set up a Build Host that is not running Linux (e.g. Microsoft® Windows™ or macOS®).

Note

You can, however, use CROPS on a Linux-based system.

CROPS is an open source, cross-platform development framework that provides an easily managed, extensible environment for building binaries targeted for a variety of architectures on Windows, macOS, or Linux hosts. Once the Build Host is set up using CROPS, you can prepare a shell environment to mimic that of a shell being used on a system natively running Linux.

For information on how to set up a Build Host with CROPS, see the "Setting Up to Use CROss PlatformS (CROPS)" section in the Yocto Project Development Tasks Manual.

Toaster: Regardless of what your Build Host is running, you can use Toaster to develop software using the Yocto Project. Toaster is a web interface to the Yocto Project's OpenEmbedded build system. The interface enables you to configure and run your builds. Information about builds is collected and stored in a database. You can use Toaster to configure and start builds on multiple remote build servers.

For information about Toaster and how to use it, see the Toaster User Manual.

Eclipse™ IDE: If your Build Host supports and runs the popular Eclipse IDE, you can install the Yocto Project Eclipse plug-in and use the Yocto Project to develop software. The plug-in integrates the Yocto Project functionality into Eclipse development practices.

For information about how to install and use the Yocto Project Eclipse plug-in, see the "Developing Applications Using Eclipse" chapter in the Yocto Project Application Development and the Extensible Software Development Kit (eSDK) Manual.

2.5. Reference Embedded Distribution (Poky)¶

"Poky", which is pronounced Pock-ee, is the name of the Yocto Project's reference distribution or Reference OS Kit. Poky contains the OpenEmbedded Build System (BitBake and OpenEmbedded-Core) as well as a set of metadata to get you started building your own distro. In other words, Poky is a base specification of the functionality needed for a typical embedded system as well as the components from the Yocto Project that allow you to build a distribution into a usable binary image.

Poky is a combined repository of BitBake, OpenEmbedded-Core (which is found in meta), meta-poky, meta-yocto-bsp, and documentation provided all together and known to work well together. You can view these items that make up the Poky repository in the Source Repositories.

Note

If you are interested in all the contents of the poky Git repository, see the "Top-Level Core Components" section in the Yocto Project Reference Manual.

The following figure illustrates what generally comprises Poky:

BitBake is a task executor and scheduler that is the heart of the OpenEmbedded build system.

meta-poky, which is Poky-specific metadata.

meta-yocto-bsp, which are Yocto Project-specific Board Support Packages (BSPs).

OpenEmbedded-Core (OE-Core) metadata, which includes shared configurations, global variable definitions, shared classes, packaging, and recipes. Classes define the encapsulation and inheritance of build logic. Recipes are the logical units of software and images to be built.

Documentation, which contains the Yocto Project source files used to make the set of user manuals.

Note

While Poky is a "complete" distribution specification and is tested and put through QA, you cannot use it as a product "out of the box" in its current form.

To use the Yocto Project tools, you can use Git to clone (download) the Poky repository and then use your local copy of the reference distribution to bootstrap your own distribution.

Note

Poky does not contain binary files. It is a working example of how to build your own custom Linux distribution from source.

Poky has a regular, well established, six-month release cycle under its own version. Major releases occur at the same time major releases (point releases) occur for the Yocto Project, which are typically in the Spring and Fall. For more information on the Yocto Project release schedule and cadence, see the "Yocto Project Releases and the Stable Release Process" chapter in the Yocto Project Reference Manual.

Much has been said about Poky being a "default configuration." A default configuration provides a starting image footprint. You can use Poky out of the box to create an image ranging from a shell-accessible minimal image all the way up to a Linux Standard Base-compliant image that uses a GNOME Mobile and Embedded (GMAE) based reference user interface called Sato.

One of the most powerful properties of Poky is that every aspect of a build is controlled by the metadata. You can use metadata to augment these base image types by adding metadata layers that extend functionality. These layers can provide, for example, an additional software stack for an image type, add a board support package (BSP) for additional hardware, or even create a new image type.

Metadata is loosely grouped into configuration files or package recipes. A recipe is a collection of non-executable metadata used by BitBake to set variables or define additional build-time tasks. A recipe contains fields such as the recipe description, the recipe version, the license of the package and the upstream source repository. A recipe might also indicate that the build process uses autotools, make, distutils or any other build process, in which case the basic functionality can be defined by the classes it inherits from the OE-Core layer's class definitions in ./meta/classes. Within a recipe you can also define additional tasks as well as task prerequisites. Recipe syntax through BitBake also supports both _prepend and _append operators as a method of extending task functionality. These operators inject code into the beginning or end of a task. For information on these BitBake operators, see the "Appending and Prepending (Override Style Syntax)" section in the BitBake User's Manual.
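
As a rough illustration of the recipe fields and the _prepend and _append operators described above, the following shell snippet writes a small recipe file into a scratch directory. Everything specific in it is an assumption made for the example: the recipe name hello-example, the example.com source URL, the checksum placeholder, and the bodies of the prepended and appended steps are hypothetical. The recipe is a sketch of the syntax and is not expected to build as written.

```shell
# Write a hypothetical recipe into a scratch directory (not a real layer).
recipe_dir="$(mktemp -d)"
recipe_file="$recipe_dir/hello-example_1.0.bb"

# The quoted 'EOF' keeps the shell from expanding ${PV}, ${PN}, and so on;
# those are BitBake variables and must reach the file unchanged.
cat > "$recipe_file" <<'EOF'
# Descriptive fields (all values are placeholders for illustration).
SUMMARY = "Hypothetical example application"
LICENSE = "MIT"
LIC_FILES_CHKSUM = "file://COPYING;md5=<checksum-of-COPYING>"

# Upstream source location (hypothetical URL).
SRC_URI = "http://example.com/releases/hello-example-${PV}.tar.gz"

# Reuse the autotools build logic that OE-Core provides in meta/classes.
inherit autotools

# Inject a step at the beginning of the existing configure task.
do_configure_prepend() {
    echo "about to configure ${PN}"
}

# Inject a step at the end of the existing install task.
do_install_append() {
    install -d ${D}${datadir}/hello-example
}
EOF

echo "wrote $recipe_file"
```

In this sketch the inherited class supplies the configure, compile, and install tasks, so the recipe itself only adds the two small injected steps. The version in the file name (1.0) is what BitBake uses for PV.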

2.6. The OpenEmbedded Build System Workflow¶

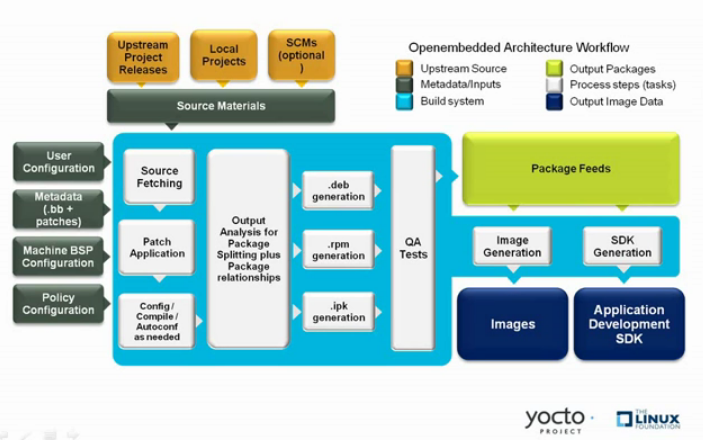

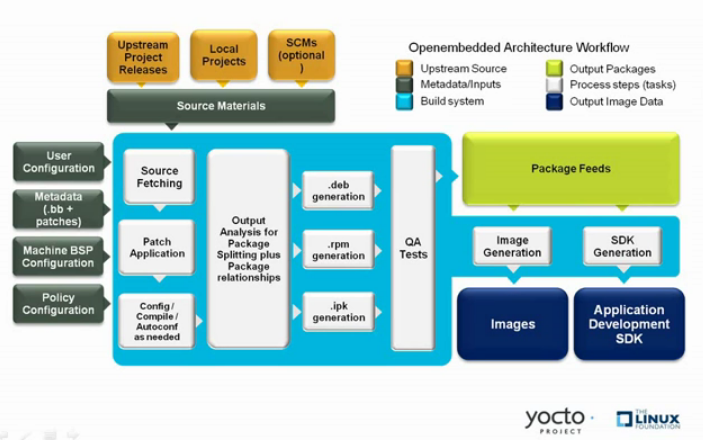

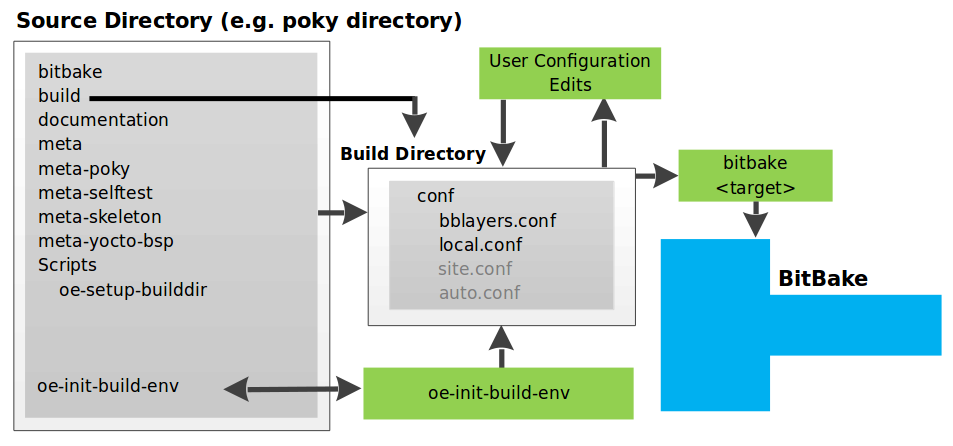

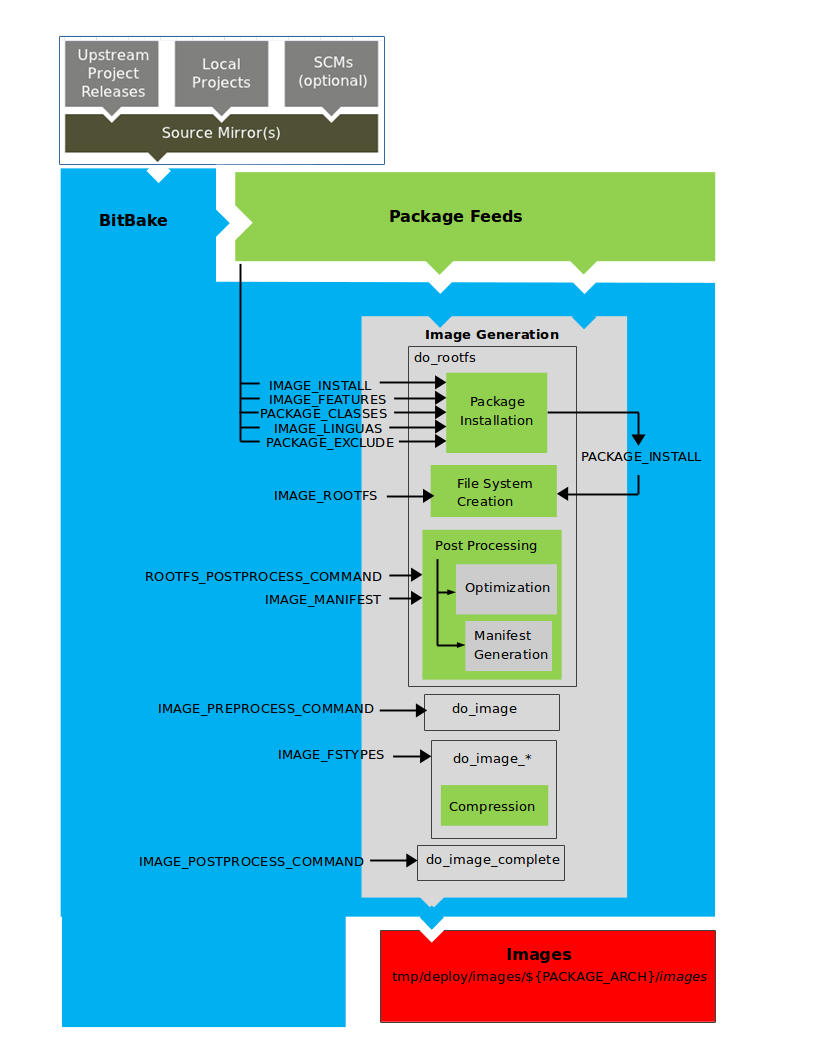

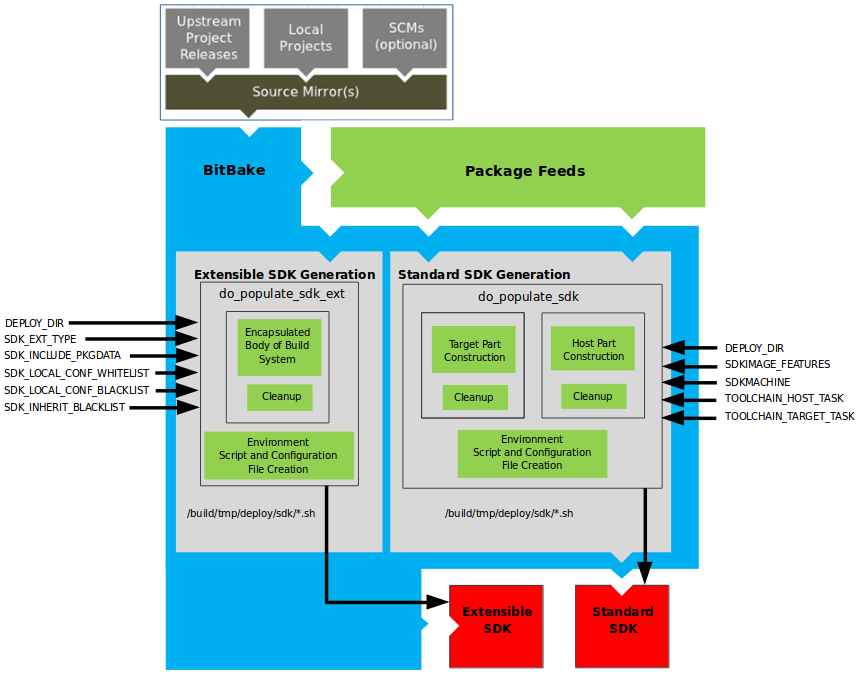

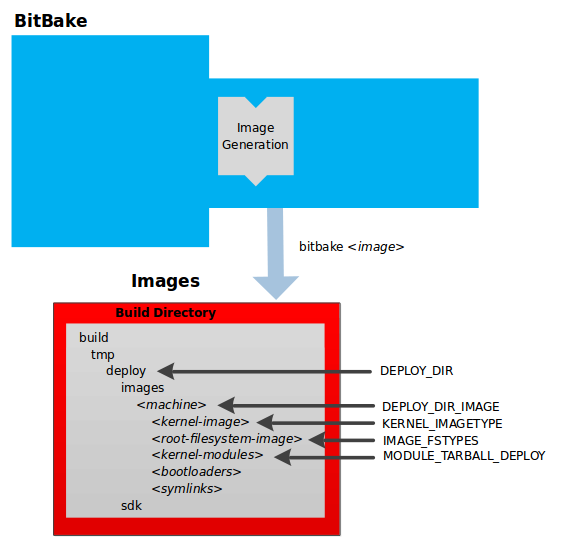

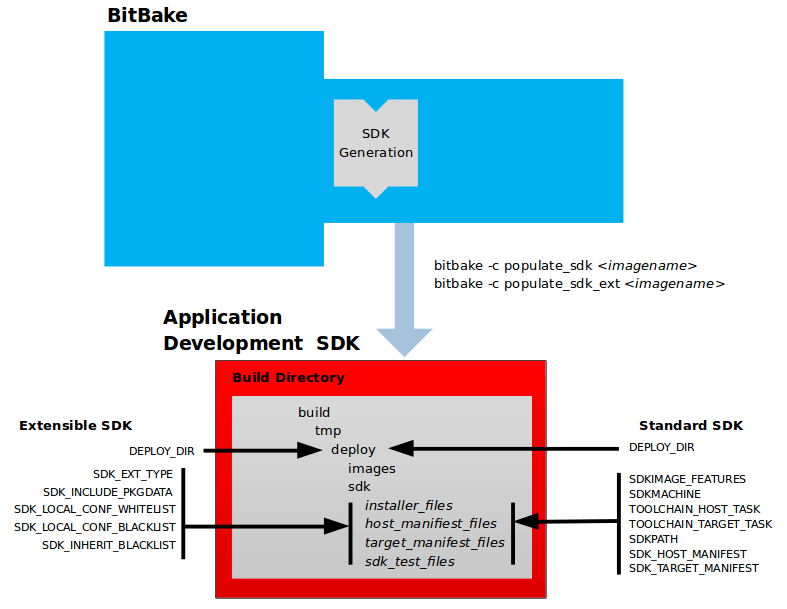

The OpenEmbedded build system uses a "workflow" to accomplish image and SDK generation. The following figure overviews that workflow:

Following is a brief summary of the "workflow":

Developers specify architecture, policies, patches and configuration details.

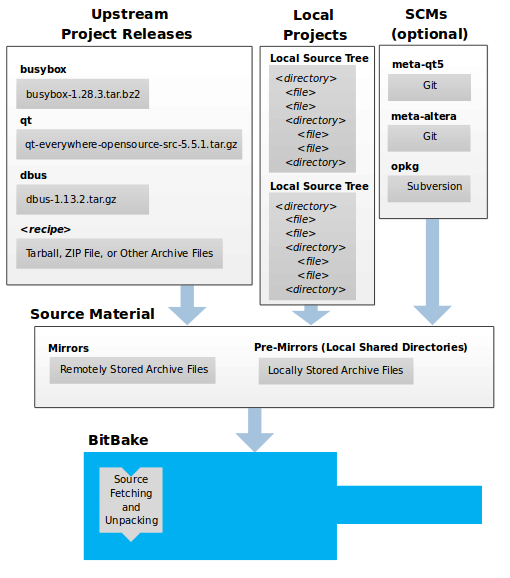

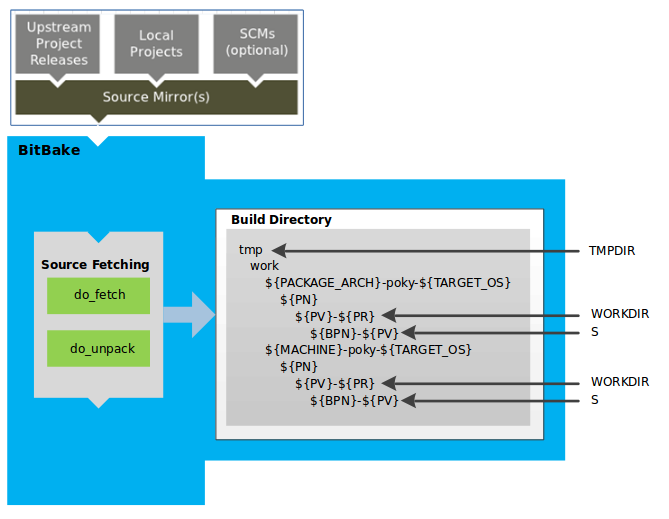

The build system fetches and downloads the source code from the specified location. The build system supports standard methods such as tarballs or source code repository systems such as Git.

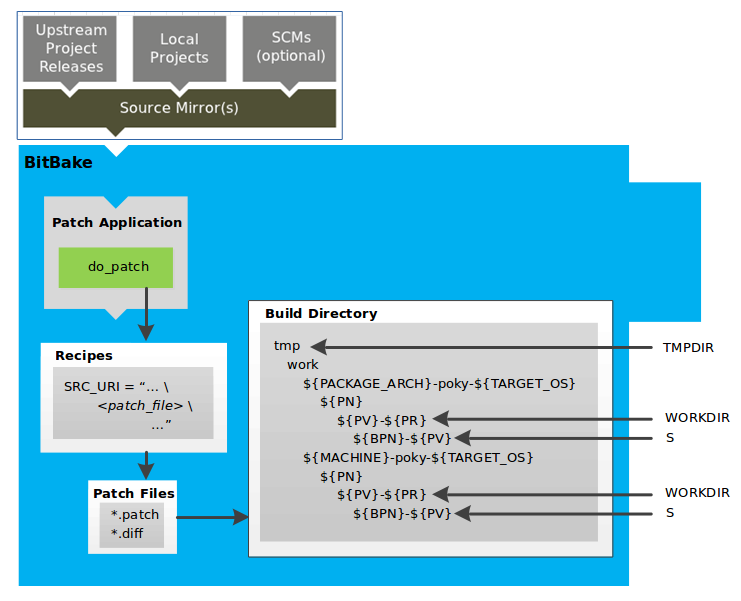

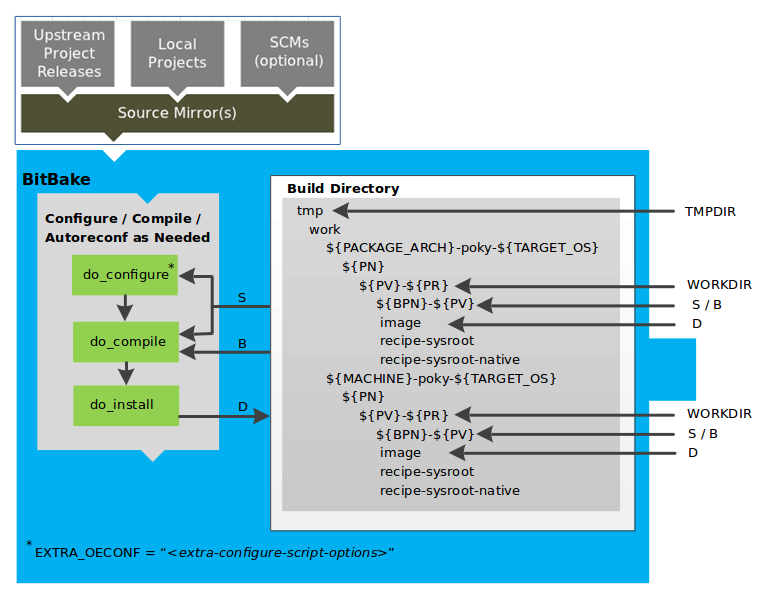

Once source code is downloaded, the build system extracts the sources into a local work area where patches are applied and common steps for configuring and compiling the software are run.

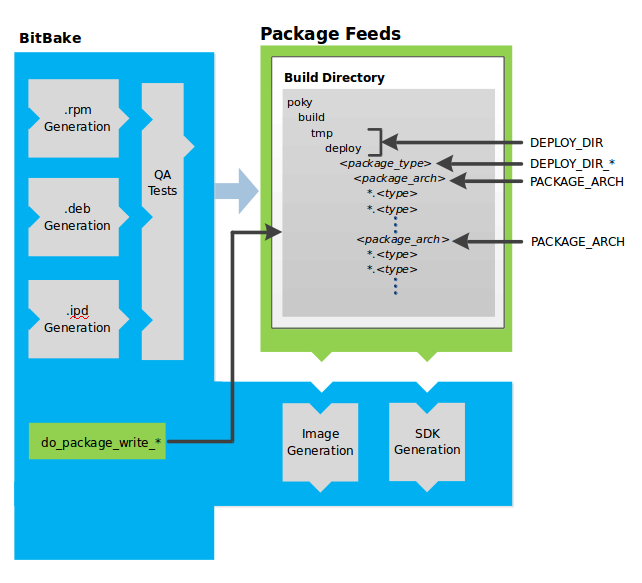

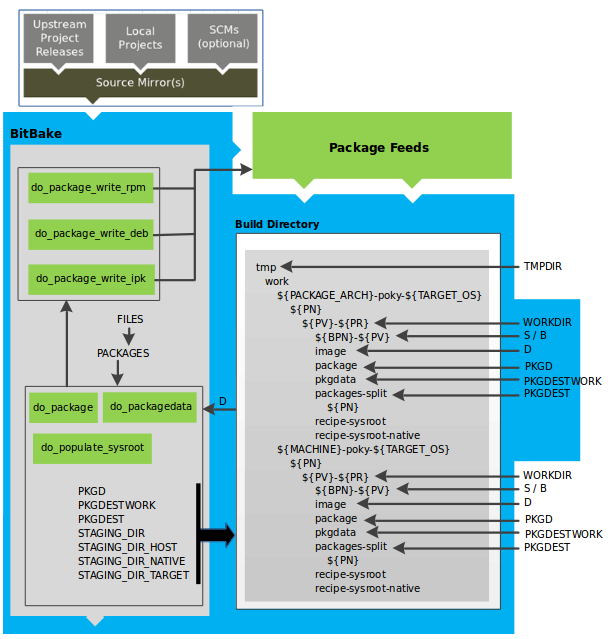

The build system then installs the software into a temporary staging area where the binary package format you select (DEB, RPM, or IPK) is used to roll up the software.

Different QA and sanity checks run throughout the entire build process.

After the binaries are created, the build system generates a binary package feed that is used to create the final root filesystem image.

The build system generates the file system image and a customized Extensible SDK (eSDK) for application development in parallel.

For a very detailed look at this workflow, see the "OpenEmbedded Build System Concepts" section.
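
For orientation, the per-recipe stages in the summary above correspond roughly to individual BitBake tasks, each of which can be requested with `bitbake -c`. The sketch below only prints the commands rather than running them, so it works without a configured build directory. The function name `print_workflow_commands` and the recipe name `example-recipe` are hypothetical, and the mapping of stages to tasks is a simplification.

```shell
# Print, in order, the BitBake command for each per-recipe stage of the workflow.
# Nothing is executed; run the printed commands inside an initialized build
# environment if you want to step through a real recipe.
print_workflow_commands() {
    recipe="$1"
    for task in fetch unpack patch configure compile install package; do
        echo "bitbake -c ${task} ${recipe}"
    done
}

print_workflow_commands example-recipe
```

In normal use you rarely run these tasks one by one. Building a recipe or image causes BitBake to schedule them in dependency order.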

2.7. Some Basic Terms¶

It helps to understand some basic terms when learning the Yocto Project. Although a list of terms exists in the "Yocto Project Terms" section of the Yocto Project Reference Manual, this section provides the definitions of some terms helpful for getting started:

Configuration Files: Files that hold global definitions of variables, user-defined variables, and hardware configuration information. These files tell the OpenEmbedded build system what to build and what to put into the image to support a particular platform.

Extensible Software Development Kit (eSDK): A custom SDK for application developers. This eSDK allows developers to incorporate their library and programming changes back into the image to make their code available to other application developers. For information on the eSDK, see the Yocto Project Application Development and the Extensible Software Development Kit (eSDK) manual.

Layer: A collection of related recipes. Layers allow you to consolidate related metadata to customize your build. Layers also isolate information used when building for multiple architectures. Layers are hierarchical in their ability to override previous specifications. You can include any number of available layers from the Yocto Project and customize the build by adding your layers after them. You can search the Layer Index for layers used within Yocto Project.

For more detailed information on layers, see the "Understanding and Creating Layers" section in the Yocto Project Development Tasks Manual. For a discussion specifically on BSP Layers, see the "BSP Layers" section in the Yocto Project Board Support Packages (BSP) Developer's Guide.

Metadata: A key element of the Yocto Project is the Metadata that is used to construct a Linux distribution and is contained in the files that the OpenEmbedded build system parses when building an image. In general, Metadata includes recipes, configuration files, and other information that refers to the build instructions themselves, as well as the data used to control what things get built and the effects of the build. Metadata also includes commands and data used to indicate what versions of software are used, from where they are obtained, and changes or additions to the software itself (patches or auxiliary files) that are used to fix bugs or customize the software for use in a particular situation. OpenEmbedded-Core is an important set of validated metadata.

OpenEmbedded Build System: The terms "BitBake" and "build system" are sometimes used for the OpenEmbedded Build System.

BitBake is a task scheduler and execution engine that parses instructions (i.e. recipes) and configuration data. After a parsing phase, BitBake creates a dependency tree to order the compilation, schedules the compilation of the included code, and finally executes the building of the specified custom Linux image (distribution). BitBake is similar to the make tool.

During a build process, the build system tracks dependencies and performs a native or cross-compilation of the package. As a first step in a cross-build setup, the framework attempts to create a cross-compiler toolchain (i.e. Extensible SDK) suited for the target platform.

OpenEmbedded-Core (OE-Core): OE-Core is metadata comprised of foundation recipes, classes, and associated files that are meant to be common among many different OpenEmbedded-derived systems, including the Yocto Project. OE-Core is a curated subset of an original repository developed by the OpenEmbedded community that has been pared down into a smaller, core set of continuously validated recipes. The result is a tightly controlled and quality-assured core set of recipes.

You can see the Metadata in the meta directory of the Yocto Project Source Repositories.

Packages: In the context of the Yocto Project, this term refers to a recipe's packaged output produced by BitBake (i.e. a "baked recipe"). A package is generally the compiled binaries produced from the recipe's sources. You "bake" something by running it through BitBake.

It is worth noting that the term "package" can, in general, have subtle meanings. For example, the packages referred to in the "Required Packages for the Host Development System" section in the Yocto Project Reference Manual are compiled binaries that, when installed, add functionality to your Linux distribution.

Another point worth noting is that historically within the Yocto Project, recipes were referred to as packages - thus, the existence of several BitBake variables that are seemingly mis-named (e.g. PR, PV, and PE).

Poky: Poky is a reference embedded distribution and a reference test configuration. Poky provides the following:

A base-level functional distro used to illustrate how to customize a distribution.

A means by which to test the Yocto Project components (i.e. Poky is used to validate the Yocto Project).

A vehicle through which you can download the Yocto Project.

Poky is not a product level distro. Rather, it is a good starting point for customization.

Note

Poky is an integration layer on top of OE-Core.

Recipe: The most common form of metadata. A recipe contains a list of settings and tasks (i.e. instructions) for building packages that are then used to build the binary image. A recipe describes where you get source code and which patches to apply. Recipes describe dependencies for libraries or for other recipes as well as configuration and compilation options. Related recipes are consolidated into a layer.

Chapter 3. The Yocto Project Development Environment¶

This chapter takes a look at the Yocto Project development environment. The chapter provides Yocto Project development environment concepts that help you understand how work is accomplished in an open source environment, which is very different from work accomplished in a closed, proprietary environment.

Specifically, this chapter addresses open source philosophy, source repositories, workflows, Git, and licensing.

3.1. Open Source Philosophy¶

Open source philosophy is characterized by software development directed by peer production and collaboration through an active community of developers. Contrast this to the more standard centralized development models used by commercial software companies where a finite set of developers produces a product for sale using a defined set of procedures that ultimately result in an end product whose architecture and source material are closed to the public.

Open source projects conceptually have differing concurrent agendas, approaches, and production. These facets of the development process can come from anyone in the public (community) who has a stake in the software project. The open source environment contains new copyright, licensing, domain, and consumer issues that differ from the more traditional development environment. In an open source environment, the end product, source material, and documentation are all available to the public at no cost.

A benchmark example of an open source project is the Linux kernel, which was initially conceived and created by Finnish computer science student Linus Torvalds in 1991. Conversely, a good example of a non-open source project is the Windows® family of operating systems developed by Microsoft® Corporation.

Wikipedia has a good historical description of the Open Source Philosophy here. You can also find helpful information on how to participate in the Linux Community here.

3.2. The Development Host¶

A development host or build host is key to using the Yocto Project. Because the goal of the Yocto Project is to develop images or applications that run on embedded hardware, development of those images and applications generally takes place on a system not intended to run the software - the development host.

You need to set up a development host in order to use it with the Yocto Project. Most find that it is best to have a native Linux machine function as the development host. However, it is possible to use a system that does not run Linux as its operating system as your development host. When you have a Mac or Windows-based system, you can set it up as the development host by using CROPS, which leverages Docker Containers. Once you take the steps to set up a CROPS machine, you effectively have access to a shell environment that is similar to what you see when using a Linux-based development host. For the steps needed to set up a system using CROPS, see the "Setting Up to Use CROss PlatformS (CROPS)" section in the Yocto Project Development Tasks Manual.

If your development host is going to be a system that runs a Linux distribution, steps still exist that you must take to prepare the system for use with the Yocto Project. You need to be sure that the Linux distribution on the system is one that supports the Yocto Project. You also need to be sure that the correct set of host packages are installed that allow development using the Yocto Project. For the steps needed to set up a development host that runs Linux, see the "Setting Up a Native Linux Host" section in the Yocto Project Development Tasks Manual.

Once your development host is set up to use the Yocto Project, several methods exist for you to do work in the Yocto Project environment:

Command Lines, BitBake, and Shells: Traditional development in the Yocto Project involves using the OpenEmbedded build system, which uses BitBake, in a command-line environment from a shell on your development host. You can accomplish this from a host that is a native Linux machine or from a host that has been set up with CROPS. Either way, you create, modify, and build images and applications all within a shell-based environment using components and tools available through your Linux distribution and the Yocto Project.

For a general flow of the build procedures, see the "Building a Simple Image" section in the Yocto Project Development Tasks Manual.

Board Support Package (BSP) Development: Development of BSPs involves using the Yocto Project to create and test layers that allow easy development of images and applications targeted for specific hardware. To develop BSPs, you need to take some additional steps beyond what was described in setting up a development host.

The Yocto Project Board Support Package (BSP) Developer's Guide provides BSP-related development information. For specifics on development host preparation, see the "Preparing Your Build Host to Work With BSP Layers" section in the Yocto Project Board Support Package (BSP) Developer's Guide.

Kernel Development: If you are going to be developing kernels using the Yocto Project you likely will be using devtool. A workflow using devtool makes kernel development quicker by reducing iteration cycle times.

The Yocto Project Linux Kernel Development Manual provides kernel-related development information. For specifics on development host preparation, see the "Preparing the Build Host to Work on the Kernel" section in the Yocto Project Linux Kernel Development Manual.

Using the Eclipse™ IDE: One of two Yocto Project development methods that involves an interface that effectively puts the Yocto Project into the background is the popular Eclipse IDE. This method of development is advantageous if you are already familiar with working within Eclipse. Development is supported through a plugin that you install onto your development host.

For steps that show you how to set up your development host to use the Eclipse Yocto Project plugin, see the "Developing Applications Using Eclipse™" Chapter in the Yocto Project Application Development and the Extensible Software Development Kit (eSDK) manual.

Using Toaster: The other Yocto Project development method that involves an interface that effectively puts the Yocto Project into the background is Toaster. Toaster provides an interface to the OpenEmbedded build system. The interface enables you to configure and run your builds. Information about builds is collected and stored in a database. You can use Toaster to configure and start builds on multiple remote build servers.

For steps that show you how to set up your development host to use Toaster and on how to use Toaster in general, see the Toaster User Manual.

3.3. Yocto Project Source Repositories¶

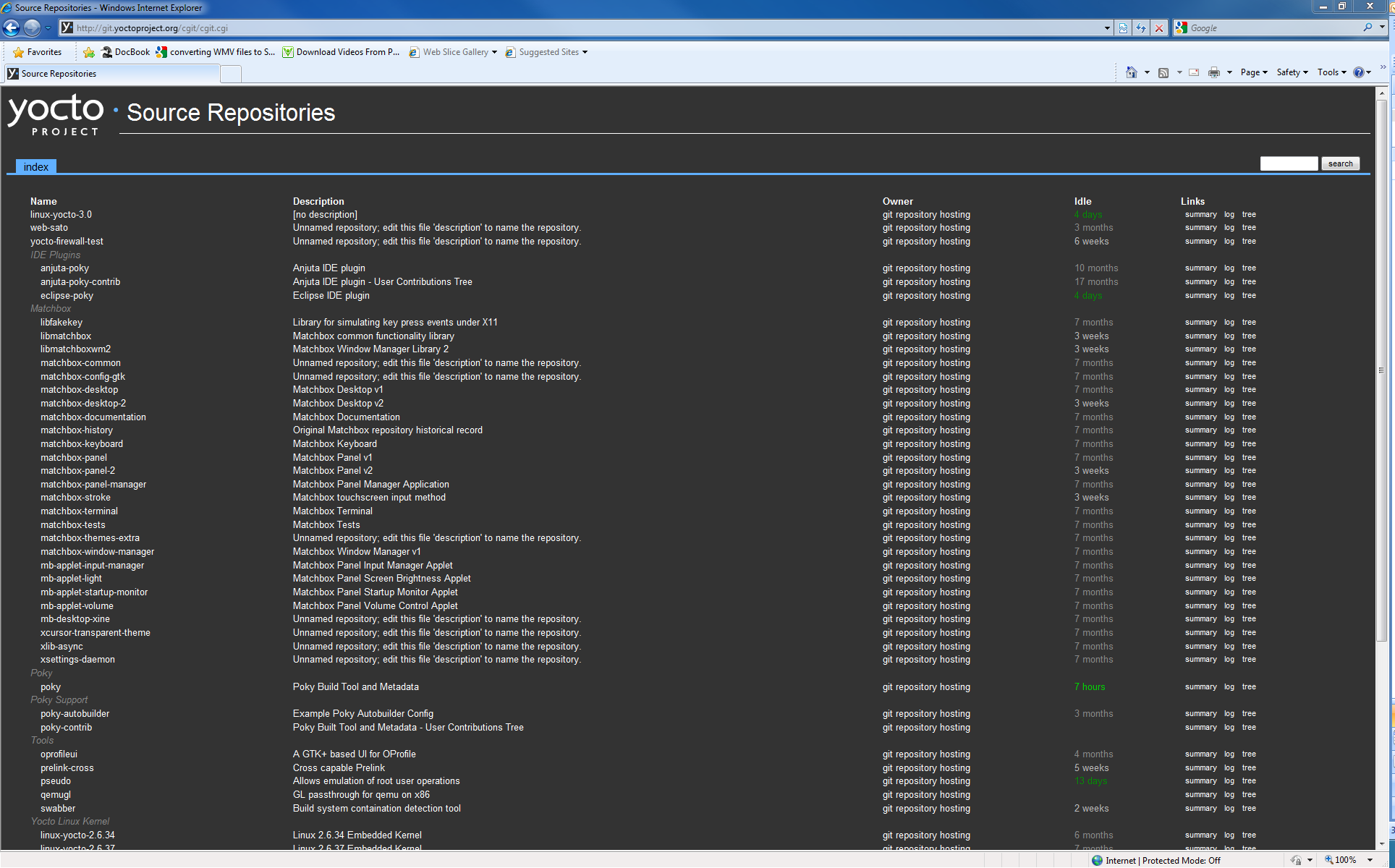

The Yocto Project team maintains complete source repositories for all Yocto Project files at http://git.yoctoproject.org. This web-based source code browser is organized into categories by function such as IDE Plugins, Matchbox, Poky, Yocto Linux Kernel, and so forth. From the interface, you can click on any particular item in the "Name" column and see the URL at the bottom of the page that you need to clone a Git repository for that particular item. Having a local Git repository of the Source Directory, which is usually named "poky", allows you to make changes, contribute to the history, and ultimately enhance the Yocto Project's tools, Board Support Packages, and so forth.

For any supported release of Yocto Project, you can also go to the Yocto Project Website and select the "DOWNLOADS" item from the "SOFTWARE" menu and get a released tarball of the poky repository, any supported BSP tarball, or Yocto Project tools. Unpacking these tarballs gives you a snapshot of the released files.

Notes

The recommended method for setting up the Yocto Project Source Directory and the files for supported BSPs (e.g., meta-intel) is to use Git to create a local copy of the upstream repositories.

Be sure to always work in matching branches for both the selected BSP repository and the Source Directory (i.e. poky) repository. For example, if you have checked out the "master" branch of poky and you are going to use meta-intel, be sure to check out the "master" branch of meta-intel.
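
One way to check the matching-branch advice in the note above is to compare the branch that is checked out in each local clone. The following is a minimal sketch. The helper names `current_branch` and `same_branch` are hypothetical, as are the clone locations shown in the usage comment.

```shell
# Print the name of the branch currently checked out in the repository at path $1.
current_branch() {
    git -C "$1" rev-parse --abbrev-ref HEAD
}

# Succeed only when both repositories have the same branch checked out.
same_branch() {
    [ "$(current_branch "$1")" = "$(current_branch "$2")" ]
}

# Usage, assuming local clones at ~/poky and ~/meta-intel (hypothetical paths):
#   same_branch ~/poky ~/meta-intel || echo "branch mismatch" >&2
```

The comparison is by branch name only. It does not verify that the two checkouts are up to date with their upstream repositories.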

In summary, here is where you can get the project files needed for development:

Source Repositories: This area contains IDE Plugins, Matchbox, Poky, Poky Support, Tools, Yocto Linux Kernel, and Yocto Metadata Layers. You can create local copies of Git repositories for each of these areas.

For steps on how to view and access these upstream Git repositories, see the "Accessing Source Repositories" Section in the Yocto Project Development Tasks Manual.

Index of /releases: This is an index of releases such as the Eclipse™ Yocto Plug-in, miscellaneous support, Poky, Pseudo, installers for cross-development toolchains, and all released versions of Yocto Project in the form of images or tarballs. Downloading and extracting these files does not produce a local copy of the Git repository but rather a snapshot of a particular release or image.

For steps on how to view and access these files, see the "Accessing Index of Releases" section in the Yocto Project Development Tasks Manual.

"DOWNLOADS" page for the Yocto Project Website:

The Yocto Project website includes a "DOWNLOADS" page accessible through the "SOFTWARE" menu that allows you to download any Yocto Project release, tool, and Board Support Package (BSP) in tarball form. The tarballs are similar to those found in the Index of /releases: area.

For steps on how to use the "DOWNLOADS" page, see the "Using the Downloads Page" section in the Yocto Project Development Tasks Manual.

3.4. Git Workflows and the Yocto Project¶

Developing using the Yocto Project likely requires the use of Git. Git is a free, open source distributed version control system used as part of many collaborative design environments. This section provides workflow concepts using the Yocto Project and Git. In particular, the information covers basic practices that describe roles and actions in a collaborative development environment.

Note

If you are familiar with this type of development environment, you might not want to read this section.

The Yocto Project files are maintained using Git in "branches" whose Git histories track every change and whose structures provide branches for all diverging functionality. Although there is no need to use Git, many open source projects do so.

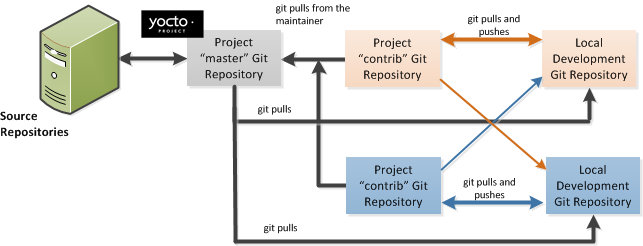

For the Yocto Project, a key individual called the "maintainer" is responsible for the integrity of the "master" branch of a given Git repository. The "master" branch is the “upstream” repository from which final or most recent builds of a project occur. The maintainer is responsible for accepting changes from other developers and for organizing the underlying branch structure to reflect release strategies and so forth.

Note

For information on finding out who is responsible for (maintains) a particular area of code in the Yocto Project, see the "Submitting a Change to the Yocto Project" section of the Yocto Project Development Tasks Manual.

The Yocto Project poky Git repository also has an upstream contribution Git repository named poky-contrib. You can see all the branches in this repository using the web interface of the Source Repositories organized within the "Poky Support" area. These branches hold changes (commits) to the project that have been submitted or committed by the Yocto Project development team and by community members who contribute to the project. The maintainer determines if the changes are qualified to be moved from the "contrib" branches into the "master" branch of the Git repository.

Developers (including contributing community members) create and maintain cloned repositories of upstream branches. The cloned repositories are local to their development platforms and are used to develop changes. When a developer is satisfied with a particular feature or change, they "push" the change to the appropriate "contrib" repository.

Developers are responsible for keeping their local repository up-to-date with whatever upstream branch they are working against. They are also responsible for straightening out any conflicts that might arise within files that are being worked on simultaneously by more than one person. All this work is done locally on the development host before anything is pushed to a "contrib" area and examined at the maintainer’s level.

A somewhat formal method exists by which developers commit changes and push them into the "contrib" area and subsequently request that the maintainer include them into an upstream branch. This process is called “submitting a patch” or "submitting a change." For information on submitting patches and changes, see the "Submitting a Change to the Yocto Project" section in the Yocto Project Development Tasks Manual.

In summary, a single point of entry exists for changes into a "master" or development branch of the Git repository, which is controlled by the project’s maintainer. And, a set of developers exist who independently develop, test, and submit changes to "contrib" areas for the maintainer to examine. The maintainer then chooses which changes are going to become a permanent part of the project.

While each development environment is unique, there are some best practices or methods that help development run smoothly. The following list describes some of these practices. For more information about Git workflows, see the workflow topics in the Git Community Book.

Make Small Changes: It is best to keep the changes you commit small as compared to bundling many disparate changes into a single commit. This practice not only keeps things manageable but also allows the maintainer to more easily include or refuse changes.

Make Complete Changes: It is also good practice to leave the repository in a state that allows you to still successfully build your project. In other words, do not commit half of a feature, then add the other half as a separate, later commit. Each commit should take you from one buildable project state to another buildable state.

Use Branches Liberally: It is very easy to create, use, and delete local branches in your working Git repository on the development host. You can name these branches anything you like. It is helpful to give them names associated with the particular feature or change on which you are working. Once you are done with a feature or change and have merged it into your local master branch, simply discard the temporary branch.

Merge Changes: The git merge command allows you to take the changes from one branch and fold them into another branch. This process is especially helpful when more than a single developer might be working on different parts of the same feature. Merging changes also automatically identifies any collisions or "conflicts" that might happen as a result of the same lines of code being altered by two different developers.

Manage Branches: Because branches are easy to use, you should use a system where branches indicate varying levels of code readiness. For example, you can have a "work" branch to develop in, a "test" branch where the code or change is tested, a "stage" branch where changes are ready to be committed, and so forth. As your project develops, you can merge code across the branches to reflect ever-increasing stable states of the development.

Use Push and Pull: The push-pull workflow is based on the concept of developers "pushing" local commits to a remote repository, which is usually a contribution repository. This workflow is also based on developers "pulling" known states of the project down into their local development repositories. The workflow easily allows you to pull changes submitted by other developers from the upstream repository into your work area ensuring that you have the most recent software on which to develop. The Yocto Project has two scripts named create-pull-request and send-pull-request that ship with the release to facilitate this workflow. You can find these scripts in the scripts folder of the Source Directory. For information on how to use these scripts, see the "Using Scripts to Push a Change Upstream and Request a Pull" section in the Yocto Project Development Tasks Manual.

Patch Workflow: This workflow allows you to notify the maintainer through an email that you have a change (or patch) you would like considered for the "master" branch of the Git repository. To send this type of change, you format the patch and then send the email using the Git commands git format-patch and git send-email. For information on how to use these commands, see the "Submitting a Change to the Yocto Project" section in the Yocto Project Development Tasks Manual.
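
Several of the practices above can be tried safely in a throwaway repository. The sketch below makes one small commit on a topic branch, turns it into a patch file with git format-patch, and then merges and discards the branch. The repository location, the branch name fix-typo, the file contents, and the developer identity are all placeholders chosen for the example. Pushing to a "contrib" repository and running git send-email are omitted because both need a configured remote or mail setup.

```shell
# Work in a throwaway repository so nothing touches a real checkout.
repo="$(mktemp -d)"
cd "$repo"
git init -q .
git symbolic-ref HEAD refs/heads/master    # name the initial branch "master"
git config user.name "Example Developer"   # placeholder identity
git config user.email "dev@example.com"

echo "base" > README
git add README
git commit -q -m "Add README"

# Use Branches Liberally: do the work on a short-lived topic branch.
git checkout -q -b fix-typo
echo "base, with a small fix" > README
git commit -q -a -m "README: fix wording"  # Make Small Changes: one focused commit

# Patch Workflow: write the newest commit out as a mailable patch file.
patch_file="$(git format-patch -1 -o "$repo/outgoing")"

# Merge Changes: fold the topic branch into master, then discard it.
git checkout -q master
git merge -q fix-typo
git branch -d fix-typo >/dev/null

echo "patch written to $patch_file"
```

Because the topic branch holds a single complete change, master moves from one buildable state to the next, and the generated patch file contains exactly that one commit.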

3.5. Git¶

The Yocto Project makes extensive use of Git, which is a free, open source distributed version control system. Git supports distributed development, non-linear development, and can handle large projects. It is best that you have some fundamental understanding of how Git tracks projects and how to work with Git if you are going to use the Yocto Project for development. This section provides a quick overview of how Git works and provides you with a summary of some essential Git commands.

Notes

For more information on Git, see http://git-scm.com/documentation.

If you need to download Git, it is recommended that you add Git to your system through your distribution's "software store" (e.g. for Ubuntu, use the Ubuntu Software feature). For the Git download page, see http://git-scm.com/download.

For information beyond the introductory nature in this section, see the "Locating Yocto Project Source Files" section in the Yocto Project Development Tasks Manual.

3.5.1. Repositories, Tags, and Branches¶

As mentioned briefly in the previous section and also in the "Git Workflows and the Yocto Project" section, the Yocto Project maintains source repositories at http://git.yoctoproject.org. If you look at this web-interface of the repositories, each item is a separate Git repository.

Git repositories use branching techniques that track content change (not files) within a project (e.g. a new feature or updated documentation). Creating a tree-like structure based on project divergence allows for excellent historical information over the life of a project. This methodology also allows for an environment from which you can do lots of local experimentation on projects as you develop changes or new features.

A Git repository represents all development efforts for a given project. For example, the Git repository poky contains all changes and developments for that repository over the course of its entire life. That means that all changes that make up all releases are captured. The repository maintains a complete history of changes. You can create a local copy of any repository by "cloning" it with the git clone command. When you clone a Git repository, you end up with an identical copy of the repository on your development system. Once you have a local copy of a repository, you can take steps to develop locally. For examples on how to clone Git repositories, see the "Locating Yocto Project Source Files" section in the Yocto Project Development Tasks Manual.

It is important to understand that Git tracks content change and not files. Git uses "branches" to organize different development efforts. For example, the poky repository has several branches that include the current "sumo" branch, the "master" branch, and many branches for past Yocto Project releases. You can see all the branches by going to http://git.yoctoproject.org/cgit.cgi/poky/ and clicking on the [...] link beneath the "Branch" heading.

Each of these branches represents a specific area of development. The "master" branch represents the current or most recent development. All other branches represent offshoots of the "master" branch.

When you create a local copy of a Git repository, the copy has the same set of branches as the original. This means you can use Git to create a local working area (also called a branch) that tracks a specific development branch from the upstream source Git repository. In other words, you can define your local Git environment to work on any development branch in the repository. To help illustrate, consider the following example Git commands:

$ cd ~

$ git clone git://git.yoctoproject.org/poky

$ cd poky

$ git checkout -b sumo origin/sumo

In the previous example, after moving to the home directory, the git clone command creates a local copy of the upstream poky Git repository. By default, Git checks out the "master" branch for your work. After changing the working directory to the new local repository (i.e. poky), the git checkout command creates and checks out a local branch named "sumo", which tracks the upstream "origin/sumo" branch. Changes you make while in this branch would ultimately affect the upstream "sumo" branch of the poky repository.

It is important to understand that when you create and check out a local working branch based on a branch name, your local environment matches the "tip" of that particular development branch at the time you created your local branch, which could be different from the files in the "master" branch of the upstream repository. In other words, creating and checking out a local branch based on the "sumo" branch name is not the same as checking out the "master" branch in the repository. Keep reading to see how you create a local snapshot of a Yocto Project Release.
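The clone-and-track sequence described above can be tried without network access. The following is a minimal sketch that assumes a throwaway local repository standing in for the upstream poky repository. The directory names, file contents, and user identity are illustrative only and are not part of the Yocto Project.

```shell
#!/bin/sh
# Sketch: a temporary local repository stands in for the upstream poky repository.
set -e
work=$(mktemp -d)

# Build the stand-in "upstream" with a "master" branch and a "sumo" branch.
git init -q "$work/upstream"
cd "$work/upstream"
git symbolic-ref HEAD refs/heads/master   # name the initial branch explicitly
git config user.name "Example User"       # hypothetical identity for the demo
git config user.email "user@example.com"
echo "development" > README
git add README
git commit -q -m "Initial commit"
git branch sumo                           # stand-in release branch

# Clone the stand-in upstream. Git checks out "master" by default.
git clone -q "$work/upstream" "$work/poky"
cd "$work/poky"

# Create and check out a local "sumo" branch that tracks "origin/sumo".
git checkout -q -b sumo origin/sumo

current_branch=$(git rev-parse --abbrev-ref HEAD)
tracked_branch=$(git rev-parse --abbrev-ref 'sumo@{upstream}')
echo "on $current_branch, tracking $tracked_branch"
```

The final two git rev-parse calls simply report which branch is checked out and which upstream branch it tracks, which is a quick way to confirm the relationship that git checkout -b set up.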

Git uses "tags" to mark specific changes in a repository branch structure. Typically, a tag is used to mark a special point such as the final change (or commit) before a project is released. You can see the tags used with the poky Git repository by going to http://git.yoctoproject.org/cgit.cgi/poky/ and clicking on the [...] link beneath the "Tag" heading. Some key tags for the poky repository are jethro-14.0.3, morty-16.0.1, pyro-17.0.0, and sumo-19.0.1. These tags represent Yocto Project releases.

When you create a local copy of the Git repository, you also have access to all the tags in the upstream repository. Similar to branches, you can create and check out a local working Git branch based on a tag name. When you do this, you get a snapshot of the Git repository that reflects the state of the files when the change associated with that tag was made. The most common use is to check out a working branch that matches a specific Yocto Project release. Here is an example:

$ cd ~

$ git clone git://git.yoctoproject.org/poky

$ cd poky

$ git fetch --tags

$ git checkout tags/rocko-18.0.0 -b my_rocko-18.0.0

In this example, the name of the top-level directory of your local Yocto Project repository is poky. After moving to the poky directory, the git fetch command makes all the upstream tags available locally in your repository. Finally, the git checkout command creates and checks out a branch named "my_rocko-18.0.0" that is based on the upstream branch whose "HEAD" matches the commit in the repository associated with the "rocko-18.0.0" tag. The files in your repository now exactly match that particular Yocto Project release as it is tagged in the upstream Git repository.

It is important to understand that when you create and check out a local working branch based on a tag, your environment matches a specific point in time and not the entire development branch (i.e. from the "tip" of the branch backwards).
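To see the difference between a branch created from a tag and the tip of a development branch, consider the following sketch. It assumes a temporary local repository, and the tag name "example-1.0.0" and the file notes.txt are hypothetical stand-ins for a real release tag and real project files.

```shell
#!/bin/sh
# Sketch: a branch created from a tag matches the tagged commit, not the branch tip.
set -e
work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"
git symbolic-ref HEAD refs/heads/master   # name the initial branch explicitly
git config user.name "Example User"       # hypothetical identity for the demo
git config user.email "user@example.com"

# Commit the "release" content and mark it with a (hypothetical) tag.
echo "release content" > notes.txt
git add notes.txt
git commit -q -m "Release commit"
git tag example-1.0.0

# Development continues on "master" after the release.
echo "later work" >> notes.txt
git commit -q -a -m "Post-release development"

# Create and check out a working branch based on the tag.
git checkout -q -b my_example-1.0.0 tags/example-1.0.0

branch_commit=$(git rev-parse HEAD)
tag_commit=$(git rev-parse 'example-1.0.0^{commit}')
tip_commit=$(git rev-parse master)
line_count=$(wc -l < notes.txt | tr -d ' ')
echo "working branch is at $branch_commit"
echo "master tip is at     $tip_commit"
```

In this sketch the working branch and the tag resolve to the same commit, while "master" has moved on, so notes.txt in the working branch contains only the line that existed at the time of the tag.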

3.5.2. Basic Commands

Git has an extensive set of commands that lets you manage changes and perform collaboration over the life of a project. Conveniently though, you can manage with a small set of basic operations and workflows once you understand the basic philosophy behind Git. You do not have to be an expert in Git to be functional. A good place to look for instruction on a minimal set of Git commands is here.

The following list of Git commands briefly describes some basic Git operations as a way to get started. As with any set of commands, this list (in most cases) simply shows the base command and omits the many arguments it supports. See the Git documentation for complete descriptions and strategies on how to use these commands:

git init: Initializes an empty Git repository. You cannot use Git commands unless you have a .git repository.

git clone: Creates a local clone of a Git repository that is on equal footing with a fellow developer's Git repository or an upstream repository.

git add: Locally stages updated file contents to the index that Git uses to track changes. You must stage all files that have changed before you can commit them.

git commit: Creates a local "commit" that documents the changes you made. Only changes that have been staged can be committed. Commits are used for historical purposes, for determining if a maintainer of a project will allow the change, and for ultimately pushing the change from your local Git repository into the project's upstream repository.

git status: Reports any modified files that possibly need to be staged and gives you a status of where you stand regarding local commits as compared to the upstream repository.

git checkout branch-name: Changes your local working branch and in this form assumes the local branch already exists. This command is analogous to "cd".

git checkout -b working-branch upstream-branch: Creates and checks out a working branch on your local machine. The local branch tracks the upstream branch. You can use your local branch to isolate your work. It is a good idea to use local branches when adding specific features or changes. Using isolated branches facilitates easy removal of changes if they do not work out.

git branch: Displays the existing local branches associated with your local repository. The branch that you have currently checked out is noted with an asterisk character.

git branch -D branch-name: Deletes an existing local branch. You need to be in a local branch other than the one you are deleting in order to delete branch-name.

git pull --rebase: Retrieves information from an upstream Git repository and places it in your local Git repository. You use this command to make sure you are synchronized with the repository from which you are basing changes (e.g. the "master" branch). The "--rebase" option ensures that any local commits you have in your branch are preserved at the top of your local branch.

git push repo-name local-branch:upstream-branch: Sends all your committed local changes to the upstream Git repository that your local repository is tracking (e.g. a contribution repository). The maintainer of the project draws from these repositories to merge changes (commits) into the appropriate branch of the project's upstream repository.

git merge: Combines or adds changes from one local branch of your repository with another branch. When you create a local Git repository, the default branch is named "master". A typical workflow is to create a temporary branch that is based off "master" that you would use for isolated work. You would make your changes in that isolated branch, stage and commit them locally, switch to the "master" branch, and then use the git merge command to apply the changes from your isolated branch into the currently checked out branch (e.g. "master"). After the merge is complete and if you are done with working in that isolated branch, you can safely delete the isolated branch.

git cherry-pick commits: Choose and apply specific commits from one branch into another branch. There are times when you might not be able to merge all the changes in one branch with another but need to pick out certain ones.

gitk: Provides a GUI view of the branches and changes in your local Git repository. This command is a good way to graphically see where things have diverged in your local repository. Note: You need to install the gitk package on your development system to use this command.

git log: Reports a history of your commits to the repository. This report lists all commits regardless of whether you have pushed them upstream or not.

git diff: Displays line-by-line differences between a local working file and the same file as understood by Git. This command is useful to see what you have changed in any given file.
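Several of the commands above combine into the isolated-branch workflow described under git merge. The following sketch walks through that workflow in a temporary local repository. The branch name "my-feature", the file file.txt, and the user identity are hypothetical and exist only for this illustration.

```shell
#!/bin/sh
# Sketch: stage, commit, branch, merge, and clean up in a temporary repository.
set -e
work=$(mktemp -d)
git init -q "$work/demo"                  # git init: create an empty repository
cd "$work/demo"
git symbolic-ref HEAD refs/heads/master   # name the initial branch explicitly
git config user.name "Example User"       # hypothetical identity for the demo
git config user.email "user@example.com"

echo "first line" > file.txt
git add file.txt                          # git add: stage the new content
git commit -q -m "Add file.txt"           # git commit: record the staged change

git checkout -q -b my-feature             # isolate work in a local branch
echo "second line" >> file.txt
git status --short                        # git status: file.txt shows as modified
git diff --stat                           # git diff: summarize unstaged changes
git add file.txt
git commit -q -m "Extend file.txt"

git checkout -q master                    # switch back to "master"
git merge -q my-feature                   # git merge: apply the isolated work
git branch -q -D my-feature               # delete the branch once it is merged

commit_count=$(git rev-list --count HEAD)
remaining_branches=$(git for-each-ref --format='%(refname:short)' refs/heads)
git log --oneline                         # git log: both commits are now on master
```

Because "master" did not change while the work happened in "my-feature", the merge in this sketch is a simple fast-forward. A merge between branches that have both advanced would instead create a merge commit or require conflict resolution.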

3.6. Licensing

Because open source projects are open to the public, they have different licensing structures in place. License evolution for both Open Source and Free Software has an interesting history. If you are interested in this history, you can find basic information here:

In general, the Yocto Project is broadly licensed under the Massachusetts Institute of Technology (MIT) License. MIT licensing permits the reuse of software within proprietary software as long as the license is distributed with that software. MIT is also compatible with the GNU General Public License (GPL). Patches to the Yocto Project follow the upstream licensing scheme. You can find information on the MIT license here. You can find information on the GNU GPL here.

When you build an image using the Yocto Project, the build process uses a known list of licenses to ensure compliance. You can find this list in the Source Directory at meta/files/common-licenses. Once the build completes, the list of all licenses found and used during that build is kept in the Build Directory at tmp/deploy/licenses.

If a module requires a license that is not in the base list, the build process generates a warning during the build. These tools make it easier for a developer to be certain of the licenses with which their shipped products must comply. However, even with these tools it is still up to the developer to resolve potential licensing issues.
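The idea of checking a license name against a base list can be illustrated with a short sketch. This is a conceptual illustration only and is not how the OpenEmbedded build system implements its check. It assumes a directory that holds one file per known license, and it uses a temporary directory with two placeholder files as a stand-in for meta/files/common-licenses. The function name check_license and the license name "My-Custom-License" are hypothetical.

```shell
#!/bin/sh
# Sketch: report whether a license name appears in a directory of known licenses.
set -e
license_dir=$(mktemp -d)                  # stand-in for meta/files/common-licenses
touch "$license_dir/MIT" "$license_dir/GPL-2.0"

# Print "known" if a file named after the license exists, otherwise "unknown".
check_license() {
    if [ -f "$license_dir/$1" ]; then
        echo "known"
    else
        echo "unknown"
    fi
}

mit_result=$(check_license MIT)
custom_result=$(check_license My-Custom-License)
echo "MIT: $mit_result"
echo "My-Custom-License: $custom_result"
```

To experiment against a real checkout, you could point license_dir at the meta/files/common-licenses directory of your Source Directory instead of the temporary directory.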

The base list of licenses used by the build process is a combination of the Software Package Data Exchange (SPDX) list and the Open Source Initiative (OSI) projects. SPDX Group is a working group of the Linux Foundation that maintains a specification for a standard format for communicating the components, licenses, and copyrights associated with a software package. OSI is a corporation dedicated to the Open Source Definition and the effort for reviewing and approving licenses that conform to the Open Source Definition (OSD).

You can find a list of the combined SPDX and OSI licenses that the Yocto Project uses in the meta/files/common-licenses directory in your Source Directory.

For information that can help you maintain compliance with various open source licensing during the lifecycle of a product created using the Yocto Project, see the "Maintaining Open Source License Compliance During Your Product's Lifecycle" section in the Yocto Project Development Tasks Manual.

Chapter 4. Yocto Project Concepts

This chapter provides explanations for Yocto Project concepts that go beyond the surface of "how-to" information and reference (or look-up) material. Concepts such as components, the OpenEmbedded build system workflow, cross-development toolchains, shared state cache, and so forth are explained.

4.1. Yocto Project Components

The BitBake task executor, together with various types of configuration files, forms the OpenEmbedded-Core. This section overviews these components by describing their use and how they interact.

BitBake handles the parsing and execution of the data files. The data itself is of various types:

Recipes: Provide details about particular pieces of software.

Class Data: Abstracts common build information (e.g. how to build a Linux kernel).

Configuration Data: Defines machine-specific settings, policy decisions, and so forth. Configuration data acts as the glue to bind everything together.

BitBake knows how to combine multiple data sources together and refers to each data source as a layer. For information on layers, see the "Understanding and Creating Layers" section of the Yocto Project Development Tasks Manual.

Following are some brief details on these core components. For additional information on how these components interact during a build, see the "OpenEmbedded Build System Concepts" section.

4.1.1. BitBake

BitBake is the tool at the heart of the OpenEmbedded build system and is responsible for parsing the Metadata, generating a list of tasks from it, and then executing those tasks.

This section briefly introduces BitBake. If you want more information on BitBake, see the BitBake User Manual.

To see a list of the options BitBake supports, use either of the following commands:

$ bitbake -h

$ bitbake --help

The most common usage for BitBake is bitbake packagename, where packagename is the name of the package you want to build (referred to as the "target"). The target often equates to the first part of a recipe's filename (e.g. "foo" for a recipe named foo_1.3.0-r0.bb). So, to process the matchbox-desktop_1.2.3.bb recipe file, you might type the following:

$ bitbake matchbox-desktop
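The relationship between a recipe filename and its target name can be sketched with plain shell string handling. This sketch only mirrors the naming convention described above, where the target is the part of the filename before the first underscore. It is not how BitBake itself resolves targets, and the variable names are illustrative.

```shell
#!/bin/sh
# Sketch: derive the usual target name from a recipe filename.
recipe_file="matchbox-desktop_1.2.3.bb"

base=${recipe_file%.bb}                   # drop the .bb extension
target=${base%%_*}                        # keep the text before the first underscore

echo "bitbake $target"
```

For the recipe file foo_1.3.0-r0.bb, the same two expansions yield "foo". A recipe filename with no underscore is left as the bare name once the extension is removed.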

Several different versions of matchbox-desktop might exist. BitBake chooses the one selected by the distribution configuration. You can get more details about how BitBake chooses between different target versions and providers in the "Preferences" section of the BitBake User Manual.

BitBake also tries to execute any dependent tasks first. So for example, before building matchbox-desktop, BitBake would build a cross compiler and glibc if they had not already been built.

A useful BitBake option to consider is the

-k or --continue

option.

This option instructs BitBake to try and continue processing

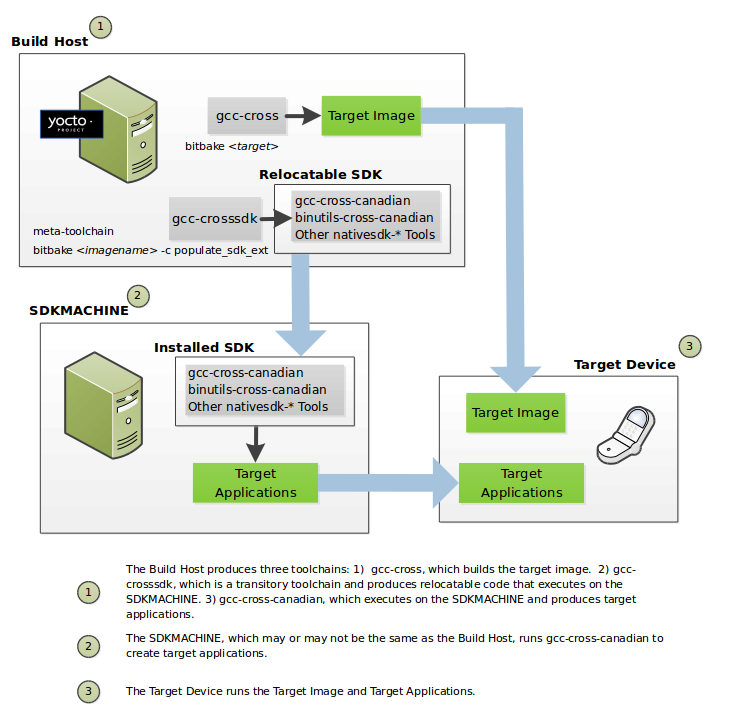

the job as long as possible even after encountering an error.